Convergence Effect: Why Artificial Superintelligence Will Arrive Faster Than Experts Predict

Note About Today’s Article

I’ve been working on today’s article for months. And I’m happy to finally release it because it adds a crucial missing perspective to the AI discussion I haven’t seen explored anywhere else in as much depth.

It’s a 5,000+ word article that includes 20 curated video clips. Although it’s a beast, it represents the condensing and synthesis of dozens of hours of my time. My intention is to save you time by finding all the info and save you overwhelm by integrating it together. I pride myself on taking the time to deeply research things at a fundamental level so I can think from first principles and share novel paradigm-shifting ideas.

Now on to the article’s topic…

Understanding timelines is arguably the first and most important thing to understand about AI. If you think AI progress is happening fast, you will have a completely different strategy than if you think it’s going slow. That’s why I’ve spent so much time on this topic alone and why I continue to track it.

Because I saw extremely fast AI timelines in 2023, I went full-time into AI in 2024. Because I see even faster timelines now, I’m adopting strategies designed for a completely different AI paradigm than the one we are in today. For example, I’m transitioning into focusing on multi-agent workflows and meta-prompting (prompts that create prompts) rather than just prompting.

Finally, this article talks about Artificial Superintelligence (ASI), which is a profoundly important topic that few people have even heard about, let alone explored. So buckle in, and let’s go!

Quick Reminder For Subscribers

The paid subscriber courses, AI-First Thought Leadership and the Augmented Awakening Course with Anand Rao, start next week. Save the date.

What You Get In Today’s Post

Free subscribers

5,000+ word article

9 curated video clips

Paid subscribers

As a paid subscriber, you also get…

Bonus content. It includes 5,000+ additional words and 11 additional curated video clips.

My current AI preparation framework. This is my step-by-step action plan that I follow and recommend for what things to do and when. If you do these things, you will be ahead of 99% of people.

Intelligence Explosion Scenario AI Prompt. I collaborated with a professional fiction writer and a dear friend, Bonnie Johnston, to create an in-depth AI prompt to generate and explore hundreds of diverse scenarios for how AGI and ASI will play out. Emotionally and cognitively understanding scenarios will help you pre-adapt to any future outcome and make smart strategic decisions now. I also share the academic research on why this approach is so powerful.

Bonnie has written 150+ fiction books under various pen names. And, she has infused AI into every part of her process. If you want to work with her for coaching or education on fiction writing, she has capacity to take on 1-2 more clients at the moment. You can reach her via LinkedIn.

8 Exponential Explanations. I spent hours clipping and curating 8 video clips by a mathematician, world-class explainers, and futurists about different aspects of exponential growth. The purpose of these clips is to help you understand exponentials at an intuitive level so that you can predict AI timelines better.

In addition to these article-specific benefits, paid subscribers also get $1,500+ in other perks (courses, prompts, templates, etc).

Full Article

In case you didn’t need a reminder that AI is the fastest evolving, most disruptive, technology ever…

Sam Altman, CEO of OpenAI, just penned an essay reflecting on ChatGPT’s two-year birthday. The following paragraph stands out:

We are now confident we know how to build AGI [Artificial General Intelligence: human-level intelligence] as we have traditionally understood it. We believe that, in 2025, we may see the first AI agents "join the workforce" and materially change the output of companies…We are beginning to turn our aim beyond that, to superintelligence in the true sense of the word. We love our current products, but we are here for the glorious future.

—Sam Altman

Interestingly, a senior product leader at Google agrees about the path to ASI (artificial superintelligence):

Finally, one of the top entrepreneurs of all time is even talking about ASI publicly…

Bottom line:

The AI narrative has fundamentally shifted.

Rather than talking about AGI as the next milestone, top AI leaders are now talking about ASI as the next big milestone. To put this in context, just last year, all of the big AI labs were talking about AGI being 3-5 years away, and ASI wasn’t even on the table.

And the crazy thing is that most people have no idea what ASI even means…

ASI Is More Profound Than We Can Even Imagine

To imagine ASI, imagine AI that is 1,000 times:

Faster than us

Smarter than us

More numerous than us

Have trouble imagining this?

That’s the point. ASI is like a singularity that’s hard to see beyond.

Fortunately, I’ve been collecting metaphors and thought experiments to help you at least begin to understand ASI…

#1. 1,000x Faster

Berkeley AI researcher Andrew Critch breaks down why AI will be so much faster in a tweet:

Over the next decade, expect AI with more like a 100x - 1,000,000x speed advantage over us.

Why?

Neurons fire at ~1000 times/second at most, while computer chips "fire" a million times faster than that. Current AI has not been distilled to run maximally efficiently, but will almost certainly run 100x faster than humans, and 1,000,000x is conceivable given the hardware speed difference.

With this speed differential between AI and humans, we might appear like statues to AI both cognitively and physically. Below is a video that shows what a 50x differential looks like:

Curator: AI Safety Memes, Source: Adam Magyar

Here’s another clip that shows fast physical speed from our perspective:

Curator: AI Safety Memes

Speed up the tech in the clip above a little bit, and you can begin to understand what Elon Musk means when he says:

“In a few years, that bot will move so fast you’ll need a strobe light to see it.”

—Elon Musk

One final thought experiment to understand the importance of speed comes from a podcast interview with theoretical physicist David Deutsch and neuroscientist Sam Harris. Below is the relevant part of the transcript that still sticks with me 9 years later:

Sam Harris: Just consider the relative speed of processing of our brains and those of our new, artificial teenagers. If we have teenagers who are thinking a million times faster than we are, even at the same level of intelligence, then every time we let them scheme for a week, they will have actually schemed for 20,000 years of parent time.
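The arithmetic behind that figure is easy to check yourself. Below is a minimal sketch, assuming only that the speedup applies uniformly to thinking time. The function name and the list of speedups are my own illustration (the 100x and 1,000,000x values come from the Critch quote above). At a million times our speed, one week works out to roughly 19,000 subjective years, which Harris rounds to 20,000.

```python
DAYS_PER_YEAR = 365.25  # average year length, including leap years


def subjective_years(wall_clock_days: float, speedup: float) -> float:
    """Years of thinking a mind gets if it runs `speedup` times faster than us."""
    return wall_clock_days * speedup / DAYS_PER_YEAR


# One week of wall-clock time at a few illustrative speedups.
for speedup in (100, 1_000, 1_000_000):
    years = subjective_years(7, speedup)
    print(f"{speedup:>9,}x -> {years:,.1f} subjective years per week")
```

Even the low end of the range matters: at 100x, a single week of our time is close to two years of thinking time for the AI.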

#2. 1,000x Smarter

Think about the intelligence difference between us and ants. Then think about the difference in intelligence between one human and all of humanity. Then, you’ll be close to understanding. Now, think about ASI as being that much further beyond all of humanity combined.

To get a really solid and quick understanding of the magnitude of the implications of ASI, I recommend this video clip from Tim Urban who is one of my favorite explainers in the world:

Source: Weights & Biases Conference

Taking things a step further, in the following clips, Eliezer Yudkowsky, one of the most well-known researchers, writers, and philosophers on the topic of superintelligent AI, provides thought experiments to help us more deeply understand what something 1,000x smarter than us might be like.

Source: Logan Bartlett Show

This second clip is 19 minutes long, so if you’re in a hurry, you might want to come back to it later. It’s worth watching.

Source: Eliezer Yudkowsky: Dangers of AI and the End of Human Civilization | Lex Fridman Podcast #368

#3. 1,000x More Numerous

Picture a group that makes up about 1/1,000 of the global population, roughly 8 million people out of 8 billion. That is the same ratio humans would have to 8 trillion AIs.

Alternatively, imagine walking into Times Square, but instead of seeing thousands of tourists, you see only one or two other humans among a sea of AI entities. Or picture entering a virtual meeting where you're the only biological participant among dozens of AI agents.

Consider how this might transform our digital landscape: almost every social media post, every article, every piece of content would likely be AI-generated. Human-created content would become as rare as hand-written letters are today. We'd need special verification systems just to confirm when something was actually created by a human.

Think about how we currently interact with websites and apps - each one typically represents a single service or company. In a world of superintelligent AI, every digital interaction could involve thousands of independent AI agents working together or competing, each one potentially as capable as an entire modern tech company.

To put this in perspective:

If you posted a question online, you might receive thousands of thoughtful responses within seconds, each from a different AI

Every piece of software could be continuously rewritten and optimized by millions of AI agents simultaneously

The number of AI entities operating in a single cloud data center could exceed the entire human population

This level of AI proliferation would fundamentally reshape our understanding of scarcity, creativity, and human contribution. We'd be living in a world where human-generated ideas and actions would be like rare artifacts in an ocean of AI-driven activity.

Bottom line

ASI will be at least 1,000x faster, smarter, and more numerous. Then, it will grow from there. Here’s what to know about this…

AI is evolving faster than even the most bullish expect

It’s hard to imagine what ASI is, let alone its impacts

The world of work isn’t even ready for AGI, let alone ASI

Most people are just getting the hang of ChatGPT

Most people dabbling in AI are actually falling further behind rather than catching up

People don’t know how to prepare for or react to this type of rapidly evolving technology

Given the magnitude of impact that will come from AGI and ASI, tracking the pace of AI evolution is critical. The faster you think AI is evolving and the more transformative you think it will be…

The more time you devote to AI.

The more quickly you let go of old strategies, mindsets, tools, and approaches.

The more you create longer term strategies that will endure through multiple paradigms of AI progress.

The more you create a strategy based on first principles assuming super advanced AI rather than just tacking AI to your current worldview.

When I first truly grasped that AGI and ASI might be close, I went into the deepest existential shock that I have had as an adult. I worried about what I could tell my kids in order to help them prepare for their future. Suddenly, so many of the things I was working on seemed insignificant.

But, here’s the good news. We humans are really good at adapting to change given enough time. As time passed by, I naturally adapted:

I emotionally processed the uncertainty of the future.

I felt comfort in knowing that, no matter what happens, I will always have the present moment and my ability to be with it.

I started coming up with things I could do now to prepare that felt exciting.

I shifted to focusing on AI full-time.

I still get surprised by the pace of AI, but I relate to those surprises in a completely different way.

Now that we understand what’s at stake with AGI and ASI timelines, let’s make things more concrete…

AI In 2025

Past technological progress has made us complacent.

Because progress often happens slower than we expect in the short-term, most people treat AI like any other technology and don’t react quickly or aggressively enough.

This phenomenon of tech going slower than we expect in the short-term is so common that the tech industry has a name for this pattern—Amara’s Law:

We tend to overestimate the effect of a technology in the short run and underestimate the effect in the long run.

—Roy Amara (technologist)

What’s important to know is that for the last 10 years, AI has defied Amara’s Law at every turn…

10 years ago most people in tech didn’t think AGI was possible.

5 years ago, most people in tech thought AGI was decades away.

Last year, most people in tech thought AGI was 3-5 years away.

Now, many are saying that 2025 is the year of AGI.

Still, the idea of superfast AI progress is a little abstract. It raises the question:

What does it mean in 2025 for AI to evolve faster than in 2024?

The following video clip from one of the world’s most successful investors, Gavin Baker, makes the answer to the question concrete:

Source: All-In Podcast

The key quote is this one:

AI is going to make more progress per quarter in 2025 than it did per year in 23 and 24.

—Gavin Baker

Said in different ways, if Baker is right, then:

By April 1, 2025, we’ll see as much progress as we saw in all of 2023 (when GPT-4 was launched) or all of 2024.

By the end of 2025, we’ll see more AI progress than in the last 8 years combined.

This is a big deal because the last two years of progress have been unimaginably fast. So, if time is like a treadmill, 2023 was walking, 2024 was jogging, and 2025 will be an all-out sprint.
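To see what Baker's claim implies, it helps to write the arithmetic down. The sketch below is a rough illustration, not a measurement. It assumes a made-up yardstick in which one "unit" equals the progress of an average year in 2023-2024, and it treats the pace of the six years before that as a hypothetical guess. Under those assumptions, 2025 delivers at least 4 units, and it beats the last 8 years combined only if the six years before 2023 add up to less than 2 units.

```python
# One "unit" = the progress made in an average year of 2023-2024.
# This is a made-up yardstick for illustration, not a real metric.
QUARTERS_PER_YEAR = 4


def progress_in_2025(units_per_quarter: float = 1.0) -> float:
    """Total 2025 progress if every quarter delivers `units_per_quarter`."""
    return QUARTERS_PER_YEAR * units_per_quarter


def beats_prior_years(units_2025: float, prior_years_units: list[float]) -> bool:
    """True if 2025 alone exceeds the combined progress of the listed years."""
    return units_2025 > sum(prior_years_units)


total_2025 = progress_in_2025()  # lower bound implied by the quote
last_two_years = [1.0, 1.0]      # 2023 and 2024, by definition of the unit
# Hypothetical guess: each of the six years before 2023 was much slower.
earlier_six_years = [0.3] * 6

print("2025 total (units):", total_2025)
print("Beats 2023 + 2024:", beats_prior_years(total_2025, last_two_years))
print("Beats last 8 years:", beats_prior_years(total_2025, earlier_six_years + last_two_years))
```

Try changing `earlier_six_years` to see how sensitive the 8-year comparison is to your view of how slow earlier progress was.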

If you’re like me, you don’t like just taking experts’ words for things. You like understanding them yourself. So, for the rest of this article, I will help you fundamentally understand deeper reasons why AI is evolving so fast in the short-term, so you can reason from first principles on your own…

Introducing The Convergence Effect: Why ASI Could Arrive Faster Than We Think

As AI development merges with cultural shifts (Moloch, open source, AI-first org charts) and tech advances (neuroscience, neural interfaces, nuclear/solar/fusion energy, biotechnology, cloud computing / distributed systems, quantum computing, and other fields), a synergy is taking place that accelerates AI progress.

The key here is that progress is not just linear or even exponential in a single domain but rather a result of compounding, synergistic effects across multiple fields.

Thus, it’s time to think about replacing Amara’s Law with the Convergence Effect:

Convergence Effect:

When innovations from multiple fields converge and stack upon each other, progress in AI doesn't go slow in the short-term. Rather, it leaps in the short-term, defying all expectations.

Here’s exactly how ASI could arrive faster than we think:

The Cross-Field Cascade: Dozens of fields are using AI to accelerate AI

The Perfect Storm: Dozens of feedback loops are stacking on each other

The Great Race: The AGI/ASI memes are fueling all of the feedback loops

The Global Brain: The AI open source movement is making it possible for tens of thousands of programmers across the world to collaborate on AI development

The Complexity Blind Spot: Traditional prediction models are failing, because no one can keep track of everything

#1: The Cross-Field Cascade: Dozens of fields are using AI to accelerate AI

What’s important to understand is just how general the AI field is as pointed out in the following clip:

AI might be the most polymathic topic to think about because there's no field or discipline that is not relevant to thinking about AI.

—Gwern Branwen

Source: Dwarkesh Podcast

Said differently, because AI is so fundamental and general, the following feedback loop occurs:

As an example, Nobel Laureate and head of AI at Google, Demis Hassabis, explains this feedback loop as it relates to one field, neuroscience:

Here’s a key part of the clip:

[Neuroscience influences AI]

Originally with the field of AI, there was a lot of inspiration taken from architectures of the brain, including neural networks and an algorithm called reinforcement learning...

[AI influences neuroscience]

And I think we'll end up in the next phase where we'll start using these AI models to analyze our own brains to help with neuroscience...

[The feedback loop]

So actually, I think it's going to come sort of full circle. Neuroscience sort of inspired modern AI, and then AI will come back and help us, I think, understand what's special about the brain.

—Demis Hassabis, Head Of AI At Google

And, in a tweet, Hassabis explains the same feedback loop between computer chip design and AI progress:

Feedback loop:

Train SOTA chip design model (AlphaChip) ->

Use it to design better AI chips ->

Use them to train better models ->

To design better chips

…
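The loop above can be sketched as a toy simulation. This is only an illustration of why a closed loop speeds up over time. The gain rates are hypothetical numbers I picked, not measurements of AlphaChip or any real system, and the function names are mine. The sketch compares a loop where chips and models improve each other against a baseline where the model improves at a fixed rate with no feedback.

```python
def run_chip_model_loop(generations, chip_gain=0.10, model_gain=0.10):
    """Toy loop: better models design better chips, better chips train better models.

    `chip_gain` and `model_gain` are hypothetical per-generation rates.
    """
    chip_quality, model_quality = 1.0, 1.0
    history = []
    for _ in range(generations):
        # The current model designs the next chip, so chip gains scale with model quality.
        chip_quality *= 1 + chip_gain * model_quality
        # The new chip trains the next model, so model gains scale with chip quality.
        model_quality *= 1 + model_gain * chip_quality
        history.append((chip_quality, model_quality))
    return history


def run_without_feedback(generations, model_gain=0.10):
    """Baseline: the model improves at a fixed rate and nothing feeds back."""
    return [(1 + model_gain) ** (g + 1) for g in range(generations)]


coupled = run_chip_model_loop(10)
baseline = run_without_feedback(10)
for generation, ((chip, model), flat) in enumerate(zip(coupled, baseline), start=1):
    print(f"gen {generation:>2}: chip={chip:.2f}  model={model:.2f}  no-feedback model={flat:.2f}")
```

In the coupled version, the improvement per generation itself keeps growing, which is the signature of a positive feedback loop.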

These two loops are just the beginning. Below are two partial lists of nearly 20 different fields directly and indirectly related to AI that could feasibly cause AGI to arrive sooner.

Subfields within AI that accelerate AI progress and are impacted by AI

AI Scaling Laws: Larger models and more compute lead to emergent behaviors, accelerating the path to AGI without the need for new architectures.

AI Prompting: More people are realizing new ways to prompt AI that elicit more intelligence.

Self-Improving AI: AI systems that can improve their own code and architecture will iterate faster than human developers, pushing AI toward general intelligence.

Reinforcement Learning & Multi-Agent Systems: Training AIs to learn complex tasks and collaborate with other agents can lead to generalized intelligence that mimics human-like problem-solving.

Transfer Learning & Few-Shot Learning: AI models that generalize knowledge across tasks and domains will reduce the need for massive datasets, enabling faster adaptability toward AGI.

AI-Augmented Research: AI systems can accelerate scientific discovery, unlocking breakthroughs that further enhance AI's own capabilities.

AI-Generated Code (AutoML): Automating AI model improvements will speed up algorithmic development and optimization, moving us closer to AGI faster than human labor alone.

Synthetic Data & Simulation Environments: AI systems can train on diverse, realistic data and simulated environments, helping them develop general intelligence-like capabilities.

Collaboration Between AI Models: Collective intelligence from multiple AI systems can produce breakthroughs greater than any single AI system, potentially leading to AGI.

Large Language Model Improvements: Enhancements in reasoning, memory, and context in large models like GPT-4 will drive increasingly general AI abilities.

Edge AI and Distributed AI: Decentralized AI learning from real-world environments in real-time can help develop more adaptable and general systems quickly.

Non-AI Tech Fields Impacted By AI That Impact AI

Biotechnology & Genomics: Understanding human cognition and genetics can inspire AI models that emulate biological intelligence, advancing AGI development.

Advanced Materials Science: Breakthroughs in materials like superconductors or graphene can enable faster, more energy-efficient hardware necessary for AGI's computational demands.

Energy Storage & Efficiency: Improvements in battery technology and energy efficiency will support the massive computational power AGI systems require, making them sustainable.

Edge Computing & IoT: The global network of decentralized data collection and real-time processing will feed AI systems with diverse, continuous learning environments.

Automation in Advanced Manufacturing: Automating the production of AI hardware will allow for faster scaling of AGI-level systems, reducing human bottlenecks in development.

Telecommunications & 5G Infrastructure: Faster, low-latency global networks enable real-time data transfer and AI collaboration, crucial for training and refining AGI systems.

Cybersecurity & Cryptography: Secure AI systems that can process decentralized data safely will unlock larger datasets and greater trust, accelerating AGI’s deployment.

Human-Computer Interaction (HCI): Enhanced interfaces will allow humans to better collaborate with AI, speeding the integration and refinement of AGI in real-world settings.

Bottom line: AGI development isn't happening in a vacuum. It’s fueled by progress in dozens and soon hundreds of fields. You can think of AGI and ASI as the Manhattan Project of the 21st century, but rather than it happening among a few scientists in the US in a centralized way, it’s happening collaboratively with millions of people across the world.

#2: The Perfect Storm: Dozens of feedback loops are stacking on each other

Positive feedback loops result in exponential change.

And exponential change is as critical to understand as it is difficult to understand.

Let me break it down.

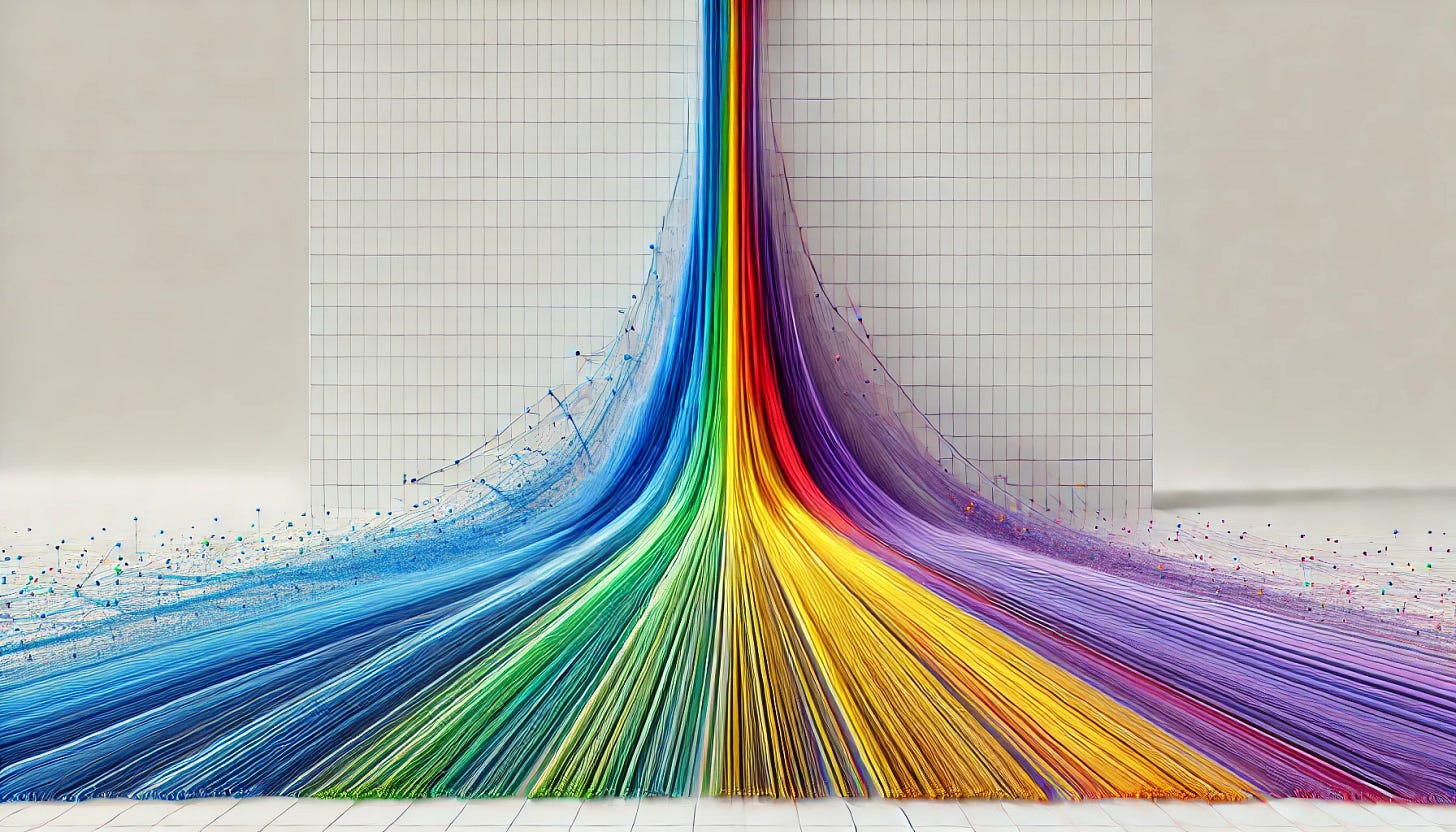

Exponentials start deceptively slow and look like linear change.

But, once they hit the elbow of the curve, change happens unimaginably fast.
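Here is a small sketch that makes the "deceptive, then disruptive" pattern concrete. The starting values and rates are arbitrary choices of mine, picked so the exponential trend starts far behind the linear one. The point is only to show how long a doubling trend can trail a steady one before it overtakes it.

```python
def linear(step: int, start: float = 1.0, increment: float = 1.0) -> float:
    """Steady progress: the same amount is added every step."""
    return start + increment * step


def exponential(step: int, start: float = 0.001, factor: float = 2.0) -> float:
    """Compounding progress: the value is multiplied by `factor` every step."""
    return start * factor ** step


def first_step_exponential_wins(max_steps: int = 100):
    """First step at which the exponential trend passes the linear one, if any."""
    for step in range(max_steps + 1):
        if exponential(step) > linear(step):
            return step
    return None


crossover = first_step_exponential_wins()
print("Exponential overtakes linear at step:", crossover)
for step in (0, 5, 10, crossover, 20, 30):
    print(f"step {step:>2}: linear={linear(step):>6.1f}  exponential={exponential(step):,.3f}")
```

For many steps the doubling trend looks negligible next to the linear one. A handful of steps after the crossover, the linear trend is the one that looks negligible.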

The visual below paints a perfect picture of the shift from deceptive to disruptive…

This deceptiveness is why many people initially overlooked many of today’s most important exponential trends and viewed them as fads...

Social Media: Initially seen as frivolous, platforms like Facebook and TikTok now shape politics, culture, and personal identity.

The Internet: Initially a tool for researchers and niche enthusiasts, the internet exploded into a global platform that disrupted communication, commerce, entertainment, and knowledge-sharing on an unprecedented scale.

COVID-19 Pandemic (not a technology): Initially perceived as a localized outbreak, the pandemic disrupted global health, economics, and society, accelerating remote work, e-commerce, and vaccine innovation.

Many technologists have painstakingly tried to explain the implications of exponential change—mostly to deaf ears. It’s one thing to sort of understand exponentials on a theoretical level, but it’s another to act on it early and consistently.

That’s why I spent several hours collecting video clips of researchers, mathematicians, futurists, and technologists explaining exponential change, and I collated them at the bottom of this article for paid subscribers so you can train your intuition.

Now, what’s important to understand about the Convergence Effect is that all of the feedback loops I mentioned earlier in the article are stacking on each other. Below is just a small sampling of examples…

AI Research Feedback Loops:

Better AI tools → Faster scientific paper analysis → Quicker discovery of insights → Better AI development

AI-powered hardware design → Specialized AI chips → More efficient training → Better AI models

AI code optimization → Faster model training → More iterations → Better optimization techniques

Better AI → Automates tasks → Economic impact → AI investment → Better AI

Better AI → Automates tasks → Faster hardware progress → Cheaper compute → AI investment → Better AI

Better AI → Automates tasks → Faster algorithmic progress → Better AI

Knowledge & Data Loops:

Better language models → Improved knowledge extraction → Enhanced training data → Smarter models

Multimodal AI → Better real-world understanding → More diverse training scenarios → More capable AI

AI-enhanced data collection → Richer datasets → Better model training → Superior data collection

Economic & Social Loops:

AI productivity gains → More investment in AI → Faster development → Greater economic returns

AI education tools → More AI-literate workforce → Faster AI adoption → Better AI education

AI research automation → More researchers can contribute → Faster breakthroughs → Better research tools

Biological & Cognitive Loops:

AI brain mapping → Better neural architectures → More brain-like AI → Better neuroscience tools

AI drug discovery → Improved cognitive enhancement → Smarter researchers → Better AI development

Brain-computer interfaces → Direct AI-brain learning → Better understanding of cognition → Improved AI models

Infrastructure Loops:

AI-optimized cloud computing → More efficient training → Better models → Improved infrastructure design

AI network optimization → Faster distributed training → Better collaborative AI → Enhanced networking

Quantum computing advances → New AI capabilities → Quantum-enhanced learning → Better quantum systems

Bottom line:

Together, these technologies are converging to create an ecosystem where progress in one area amplifies advancements in others. This convergence doesn’t just add to the pace of progress—it multiplies it, accelerating us toward AGI far faster than linear predictions would suggest.

This compounding effect means that each step toward AGI amplifies the speed and scale of the next breakthrough. The more advanced AI becomes, the more it can assist in its own evolution—whether by improving its own architectures, finding new training methods, or solving complex problems in real-time that once took months of human work. This feedback loop could turn the trajectory of AGI from gradual improvement to a rocket-like acceleration.

Not only that, when different technologies converge, we’re not just getting faster algorithms. We’re potentially looking at entirely new paradigms of intelligence that leap beyond human cognitive limitations. These synergies could lead to innovations that aren’t simply faster or better but fundamentally different from anything we’ve seen before.

Finally, AI actually reduces the silos between fields. It allows experts to prompt AI to find adjacent fields and extract relevant insights. Said differently, not only is the number of advances in each field increasing, but the ability of fields to learn from each other is increasing too.

#3: The Great Race: The AGI/ASI memes are fueling all of the feedback loops

While the feedback loops above exist, they still need fuel to run. That fuel comes in the form of money and talent flooding into AI, which then causes the AI field to take off like a rocket ship.

Together, these steps create an unstoppable cultural feedback loop:

Said differently, AGI/ASI is the most powerful meme in human history.

Here’s the structure of the AGI/ASI meme in a nutshell:

We are birthing an intelligence whose power will be so profound that it’s impossible for us to comprehend.

It will be able to do anything that a human can do, but faster and better.

It is coming faster than we expect.

It could bring on an unprecedented era of abundance or cause humanity to go extinct.

For leading AI companies, entrepreneurs, researchers, and countries, it’s the biggest opportunity and threat ever.

Being a little bit ahead of others can be the difference between success and failure.

Companies and countries can’t help but compete, or they could be left behind.

Whether this meme is technically true or not, what’s key to understand is that it’s a self-fulfilling prophecy. Because the leading companies believe it’s true, they are in an all-out cold war, using all of their resources to make it happen first. The result is enormous progress. Outside of the leading AI labs, more and more industries and professions are similarly orienting themselves around AI.

As AI progress continues to accelerate faster than expected, individuals, companies, and soon countries will scale their investment in AI exponentially. To contextualize where we are right now, just this week, Microsoft said it will invest $80 billion into AI-enabled data centers in 2025. $80 billion! Let that sink in. When countries truly get involved, the scale of investment will likely increase by another order of magnitude.

In some ways, AGI/ASI is the biggest X-Prize in human civilization. A few teams with billions or tens of billions of dollars in the bank are pushing the frontier to try and win the “prize” as they gobble up any team, talent, or idea that could give them an edge.

Another way to think about AGI is that it's a memetic black hole. As it gets closer, more and more people put more attention and urgency on it. Thus, its gravitational pull keeps getting stronger.

#4: The Global Brain: The AI open source movement is making it possible for tens of thousands of programmers across the world to collaborate on AI development

While leading AI labs like OpenAI, Anthropic, and Google command massive resources and attention, a parallel revolution is brewing in the open source community that could fundamentally alter the pace of AI progress.

To understand why, we need to look at three key dynamics:

First, there's a David vs. Goliath story unfolding.

Leading AI labs have billions in funding, massive compute resources, and some of the world's top AI talent. But the open source community has a different kind of strength: tens of thousands of researchers and developers worldwide, all building on each other's work in real-time, experimenting with novel approaches, and sharing their findings openly.

This difference in approach is crucial. The power of open source isn't just about having many people—it's about having many perspectives. When tens of thousands of researchers try different approaches simultaneously and build on each other's work, they create a kind of collective intelligence that can sometimes outperform even the most well-funded closed efforts (see Superforecasting for research on the power of crowds).

Second, the open source community is showing increasing sophistication in both organization and output.

Projects like Llama, Stable Diffusion, Qwen, DeepSeek, Cosmos, and Mistral aren't just catching up to closed-source models—they're pushing the boundaries in their own right. The gap between open and closed source capabilities is narrowing faster than many expected.

Third, and perhaps most importantly, there's a scenario where open source could actually leapfrog traditional labs.

If pre-training scaling (just making models bigger by investing tens of billions in data centers) hits diminishing returns, the key to progress will shift from raw computing power to architectural innovations and novel approaches. This is where the open source community's diversity and ability to rapidly experiment could become a decisive advantage.

We're also seeing an interesting hybrid model emerge. Major tech companies are increasingly open-sourcing their models and tools, recognizing that the benefits of community involvement often outweigh the competitive advantages of keeping everything proprietary. This creates a multiplier effect where corporate resources meet open source innovation.

The result is a new kind of innovation engine that could fundamentally change how quickly AI advances. Instead of progress being driven primarily by a few well-funded labs, we're moving toward a model where tens of thousands of contributors worldwide can meaningfully participate in and accelerate AI development.

This democratization of AI development isn't just making progress faster—it's making it more unpredictable. When innovations can come from anywhere, and when thousands of researchers can immediately build on each new breakthrough, the pace of progress becomes much harder to forecast.

The open source movement in AI isn't just another path to progress—it's a force multiplier for every other trend in the field. Each breakthrough, each new tool, each novel approach becomes a building block that thousands of researchers can immediately use and improve upon.

#5: The Complexity Blind Spot: Traditional prediction models are failing, because no one can keep track of everything

Predicting AI progress is difficult on multiple levels:

Quantity: The sheer number of innovations feeding back into each other is difficult to track.

Complexity: It’s difficult for specialists to understand innovations in fields outside their own expertise.

Exponential Curve Blindness: Most of our ancestral life was lived in a linear environment. So our intuition is fitted to a linear world. Thus, exponentials are counterintuitive.

Said differently, it’s hard enough for people to understand how one single exponential like Moore’s Law can change the world. It’s virtually impossible to understand double, triple, and nth-degree exponentials.
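One way to picture a "stacked" exponential is a trend whose growth factor is itself growing. The sketch below is my own simplified reading of that idea, with hypothetical parameters. `growth` is the per-period multiplier and `acceleration` is how much that multiplier compounds each period. Setting `acceleration` to 1.0 recovers an ordinary single exponential.

```python
def single_exponential(periods: int, growth: float = 2.0) -> float:
    """Ordinary exponential: the value is multiplied by the same factor each period."""
    value = 1.0
    for _ in range(periods):
        value *= growth
    return value


def stacked_exponential(periods: int, growth: float = 2.0, acceleration: float = 1.1) -> float:
    """The growth factor itself compounds by `acceleration` every period."""
    value = 1.0
    factor = growth
    for _ in range(periods):
        value *= factor
        factor *= acceleration  # the second feedback loop speeds up the first
    return value


for periods in (5, 10, 15, 20):
    single = single_exponential(periods)
    stacked = stacked_exponential(periods)
    print(f"{periods:>2} periods: single={single:,.0f}  stacked={stacked:,.0f}  ratio={stacked / single:,.1f}x")
```

Even a modest 10% acceleration per period opens a gap that keeps widening. That is part of why intuition trained on a single exponential still underestimates stacked ones.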

History has shown us that progress often happens in sudden leaps after long periods of gradual buildup. This non-linear dynamic is particularly important when multiple advancing technologies interact, as their combined potential can produce breakthroughs at an unpredictable, accelerated pace.

The more we rely on outdated, linear models of progress, the more we risk underestimating just how fast AGI could arrive. Think of it less as a steady climb up a hill and more like reaching the top of a rollercoaster—there’s a slow buildup, but once we hit the tipping point, the descent is rapid and unstoppable.

To Summarize

The Convergence Effect is the confluence of five factors that together accelerate AI progress faster than we can process:

The Cross-Field Cascade: Dozens of fields are using AI to accelerate AI

The Perfect Storm: Dozens of feedback loops are stacking on each other

The Great Race: The AGI/ASI memes are fueling all of the feedback loops

The Global Brain: The AI open source movement is making it possible for tens of thousands of programmers across the world to collaborate on AI development

The Complexity Blind Spot: Traditional prediction models are failing, because no one can keep track of everything

Each of these factors alone would be significant, but their combination creates a perfect storm for rapid AI advancement. The Convergence Effect suggests that AGI and ASI might arrive not just sooner than expected, but potentially far sooner than even optimistic predictions suggest. This has profound implications for how we prepare as individuals, organizations, and as a society.

What’s even crazier is that AI systems cannot yet recursively self-improve (RSI) by editing their own code. But leading AI labs and the open source community already have their eye on this milestone. Below is Mustafa Suleyman, the CEO of Microsoft AI, sharing his prediction that RSI will arrive in 3-6 years:

Once RSI happens, AI progress could truly take off.

Interestingly, Sam Altman has a similar timeline for ASI.

Conclusion

Will AGI or ASI happen sooner than we think?

In the end, no one knows for sure. Every expert is basing their projections on limited data and processing ability.

But what’s becoming increasingly clear to me is that:

There is a very good chance that AGI/ASI will arrive sooner than we think.

That uncertainty should not be a reason for complacency.

The fact that AGI and ASI are very real possibilities and that the stakes are so high should be a catalyst for urgency.

If AGI could emerge much sooner than expected, this creates a pressing need for knowledge workers, decision-makers, and policymakers to take the timeline seriously. The career landscape could shift in ways that render current skills and roles obsolete overnight. Those who are unprepared may find themselves saddled with education debt while struggling to find work and make meaning of their future.

Conversely, those who recognize the signs of accelerating convergence can position themselves to thrive in a world where AGI reshapes industries, decision-making processes, and entire economies. The future isn’t just arriving—it’s hurtling toward us faster than we think. And the difference between those who see it coming and those who don’t will define the next era of human achievement.

And if Artificial Superintelligence happens sooner than we think… Well, frankly, I’m not sure exactly what to do about it. No one is. But if I had a magic wand, I would pause ASI development until we know that we as a society are 100% ready for it, because we only get one chance to get it right.

Given that I don’t have this wand, I’m doing everything I can to understand and prepare for it. And that’s what the next section is about…

3 PAID MEMBER PERKS: PRACTICAL THINGS YOU CAN DO NOW TO PREPARE FOR THE FUTURE OF AI

Once you understand the shocking speed of AI timelines and the profundity of ASI, the question arises:

What should I do right now to prepare for the future?

I got exposed to AGI and ASI early, because one of my best friends, Emerson Spartz, is the co-founder of an AI Safety nonprofit. So, I’ve been aware of the possibilities for about 4 years, and have been very active for about 2 years.

In this section for paid subscribers, I share:

My current AI preparation framework: This is the step-by-step action plan I follow and recommend, covering what to do and when. If you do these things, you will be ahead of 99% of people.

Intelligence Explosion Scenarios: I worked with a professional fiction writer to create a prompt to generate and explore hundreds of diverse scenarios for how AGI and ASI could play out. Understanding scenarios emotionally and cognitively helps you pre-adapt to any future outcome and make smart strategic decisions now. I also share the academic research on why this is so powerful.

8 Exponential Explanations: These videos will help you understand exponential progress at an intuitive level. I spent many hours curating and clipping them. Understanding exponentials is fundamental to understanding AI timelines.