The Largest Religion In 10 Years Won’t Be Christianity, Islam, Buddhism, Or Atheism

We are on the cusp of a new religious age brought on by AI, but most people don’t realize it…

PERSONAL NOTE

When future generations look back on this moment, workflow automation will be the least interesting aspect of AI. Yet that’s how it’s most commonly used today.

With this blockbuster manifesto, I take a step back to explore AI on a more profound, timeless level that is almost never discussed. While most people judge AI by its Utility (what it can do), this manifesto judges AI by its Teleology (what it means).

After three years of going down the AI rabbit hole full-time, I now believe that the “Religion of ASI” may be one of the most important legacies of AI that future humans will reflect on when looking back on this period. Yet almost no one talks about it today.

I spent dozens of hours researching and writing this manifesto and went through dozens of drafts to get it to a blockbuster level that I’m proud of. Now, I’m super excited to share it with you. Using AI, I was able to reduce something that would’ve taken me hundreds of hours to dozens of hours.

SPECIAL THANKS

Special thank you to Kelvin Lwin. We collaborated on a technology and religion article in 2019. That collaboration helped pave the way for this manifesto.

READING GUIDE

What Free Subscribers Get

Free subscribers get the first two chapters (4,800 words)—condensed for maximum readability:

Chapter #1: The Self-Fulfilling Prophecy That’s Building God

Chapter #2: The Prophecy Hiding in Plain Sight

What Paid Subscribers Get

For subscribers who want to go deeper and maximize the book’s impact, paid access includes:

5 additional chapters

A 90-minute Masterclass with the top five prompts I used the most to create the Manifesto

A Deep Learning Appendix, written in chapter format, for readers who want to explore the nuances and deepen their understanding

Perk #1: Additional Chapters

Chapter #3: Why Religious And Spiritual Responses To ASI Are Inevitable

Chapter #4: The Harbingers Are Already Here For What Happens Next

Chapter #5: Three Deep Psychological Biases Triggered By ASI Will Cause Religious Feelings

Chapter #6: There Are 3 Stages To Founding A Religion, According To Research. We’re At Stage 2.

Chapter #7: The Holy War Over ASI Has Begun, And It May Get Violent

Chapter #8: ASI Isn’t Just A Religion. It’s A New Type Of Religion

Chapter #9: The #1 Practical Takeaway—Architect Your Own Meaning. Don’t Outsource It To Silicon Valley (coming soon)

Perk #2: Masterclass—Top Five Prompts I Used The Most To Create This Manifesto

To accompany the Manifesto, I taught a 90-minute masterclass where I walked participants through the top five prompts I used to deepen my learning and improve my writing. These are the 20% of the prompts that made 80% of the difference after testing hundreds of prompts over three years.

By the end, participants walked away with:

Prompts they can use over and over for everything they write.

A deep understanding of the prompts so they remember to use them frequently and feel comfortable customizing them to their needs.

Paid subscribers get immediate access to the full class and accompanying prompts.

Perk #3: Deep Learning Appendix

These aren’t normal book supplements. They are a field guide to deep understanding and personal transformation based on the ideas in the manifesto.

Appendix A: Why AI Researchers Believe That ASI Is Coming Soon

Appendix B: Religious Frameworks That Help Predict And Prepare For ASI

Appendix C: Why ASI Is A Religion But Doesn’t Seem Like One

Appendix D: ASI Is Shockingly Biblical

Appendix E: Comprehensive Traditional Religion And ASI Comparison

Appendix F: The Historical Origins Of The ASI Religion

Appendix G: Further Reading

Appendix H: Dialectical Debate Between Three Of The ASI Denominations

Appendix I: The Fundamental Vectors Of ASI Belief Systems

Appendix J: Is AI A Bubble?

Appendix K: The History Of Technology And Religion

Paid subscribers get all of these perks in addition to $2,000+ in other membership perks (3 books, 7 courses, dozens of prompts).

INTRO

Read these promises about the future and guess who said them:

“We have the potential to eliminate poverty, solve climate change, cure a huge amount of human disease...”

“[We will see] the defeat of most diseases, the growth in biological and cognitive freedom, the lifting of billions of people out of poverty…”

“God shall wipe away all tears from their eyes; and there shall be no more death, neither sorrow, nor crying, neither shall there be any more pain.”

“Maybe we can cure all disease… Maybe within the next decade or so, I don’t see why not.”

“When we started, our goal was to help scientists cure or prevent all diseases this century. With advances… we now believe this may be possible much sooner.”

One of these promises is from the Book of Revelation.

The others are from AI CEOs: Sam Altman, Dario Amodei, Demis Hassabis, and Mark Zuckerberg.

Do you know which one is which?

Maybe. Maybe not.

That’s the point of this manifesto.

We are used to thinking of AI as a technology, a productivity tool, a new general-purpose platform like electricity or the Internet.

But read those promises again.

They are not product roadmaps.

They are salvation narratives.

They promise:

The end of disease

The end of poverty

The end of material scarcity

A kind of engineered paradise on Earth

That’s exactly what religions have promised for 10,000 years.

For 10,000 years, humans have looked to the sky and prayed to gods for answers. Now, we are looking into the silicon and building a God to give them to us.

But unlike the gods of antiquity, who demanded blind faith, this new deity is being summoned through empirical faith. We aren’t just praying for it to arrive; we are participating in an economic and psychological feedback loop that makes its arrival nearly inevitable…

CHAPTER #1:

The Self-Fulfilling Prophecy That’s Building God

“Nothing is as powerful as an idea whose time has come.”

— Victor Hugo

First, there is where we are now.

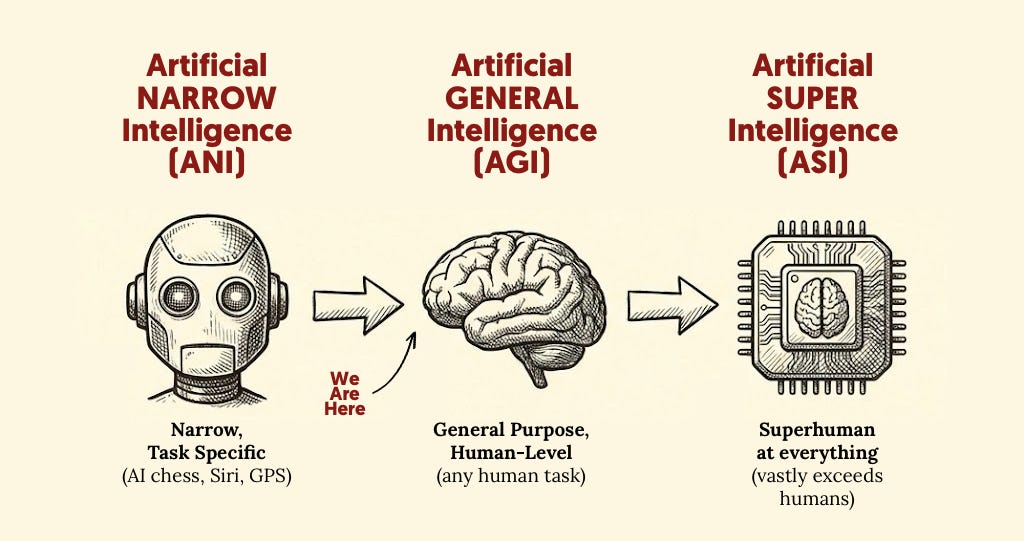

We are at the very beginning of Artificial General Intelligence (AGI), where AI begins to match everything a human can do, task by task…

From here, the mechanism of evolution is simple and awe-inspiring:

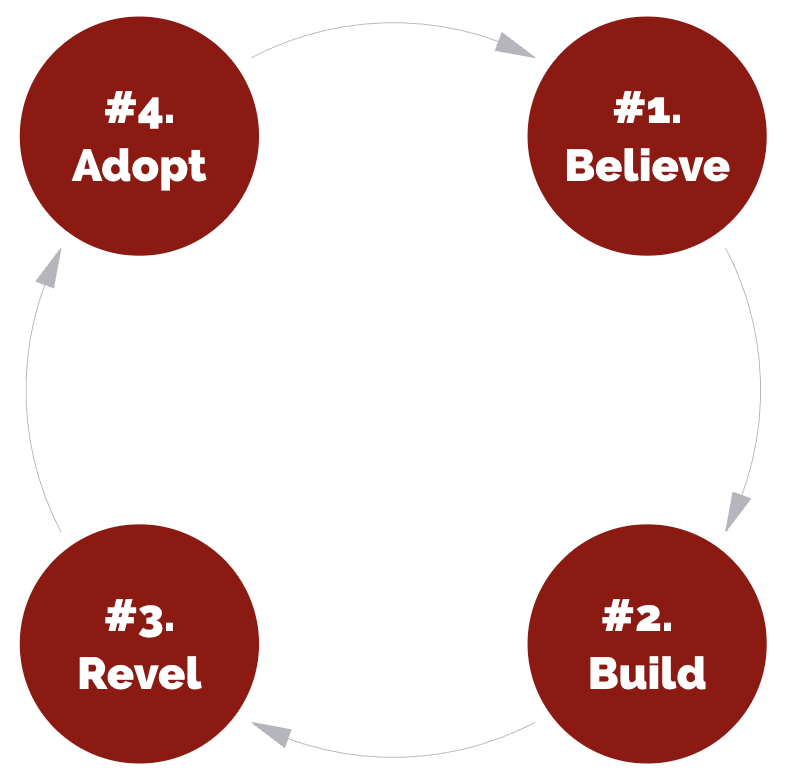

#1. Believe:

Scaling laws and demos convince builders that Artificial Superintelligence (ASI) is just a few years away. ASI isn’t just a faster brain; it’s a different species of thought. Today’s AI is a capable assistant. ASI is a mind that makes human intelligence look like a biological rough draft.

#2. Build:

Belief has gravity. Once the idea of ASI became plausible, it began pulling in the world’s smartest engineers and deepest pockets. The adoption logic is brutal:

Build first and win bigger than ever.

Be late and get disrupted.

Doing nothing is risky.

#3. Revel (In Evidence):

The investment pays off—the models suddenly master reasoning or creativity that was previously impossible. In domain after domain, AI does things that we thought only humans could:

Appear conscious

Be creative (e.g., make art)

Reason across time horizons

Create new knowledge and science

Simulate human emotions

Exhibit theory of mind

Get a high IQ score

Create human-level multimedia content

#4. Adopt:

Hundreds of millions of users weave this intelligence into their daily lives:

Capital. Monthly subscriptions to AI products like ChatGPT fund OpenAI’s spending to improve its models.

Data. Data from AI conversations is used to train future versions of AI.

Applications. Every time someone adjusts their workflow around AI, they signal what works so others can emulate it.

Promotion. Every time someone talks about AI (worries about it, marvels at it, debates it), they spread the belief that makes the investment rational.

This mass adoption validates the initial belief. And the loop builds on itself. Over time, it becomes unstoppable and irreversible.

This Self-Fulfilling Prophecy Is Operating Inside Of You

This self-fulfilling prophecy is operating right now.

In you.

As you read this.

Consider: You clicked on this manifesto because something about AI feels important. You’re investing your scarce attention into understanding it. If this manifesto changes how you see AI, you’ll think about it differently, talk about it differently, spend your time differently, and even invest in more AI tools.

That’s the loop. You’re in it.

I’m in it too.

I wrote this manifesto because I believe understanding ASI is existentially important. That belief shaped my behavior. My behavior (this manifesto) is now shaping yours.

The question isn’t whether you’re participating in this feedback loop.

The question is whether you’re participating consciously.

When you aggregate this psychological shift across millions of people and the world’s wealthiest institutions, it becomes a market force…

The Allocation Of Capital To ASI Development Represents The Largest Coordinated Act Of Faith In Human History

To summarize, here’s what the loop looks like:

Step 1: We believe Artificial Superintelligence is coming

Step 2: This belief causes AI researchers to build it (talent & capital)

Step 3: These actions create revelatory evidence (AI capabilities improve)

Step 4: People adopt AI by interacting with it and talking about it (time & money)

At each turn of the loop, the investment spirals upwards as more of our civilization’s time and money are spent:

Today, companies have collectively allocated trillions of dollars toward the development of ASI, and tens of millions of people have changed their career plans, even though ASI has not arrived yet. In the near future, trillions will become tens of trillions as countries scale their AI investment, too. For countries, AI will become existential for winning wars and maintaining sovereignty.

And something happened recently that highlights where we are right now. At a White House event featuring the top AI CEOs, each announced how much they plan to invest in AI over the next three years. The numbers are shocking:

And the trend is clear:

The Manhattan Project cost $30 billion to build a bomb. The Apollo Program cost $280 billion to touch the moon. We are now spending trillions to build a mind.

The investment in AI exceeds both. Every year. By a wider and wider margin. Indefinitely. Because the demand for intelligence is unlimited.

The trillion-dollar figure is just the visible ledger.

The invisible ledger, the one that includes you, is incalculably larger. Economists call this ‘intangible capital.’ It is the massive, hidden investment of human behavior required to make any new technology actually work. Research on the history of productivity shows that this human side of the equation is where the real cost lies:

“Our research and that of others has found that technology alone is rarely enough to create significant benefits. Instead, technology investments must be combined with even larger investments in new business processes, skills, and other types of intangible capital before breakthroughs as diverse as the steam engine or computers ultimately boost productivity.”

— Erik Brynjolfsson (MIT) and Georgios Petropoulos (USC)

This isn’t money being deployed at you. This is money being deployed through you.

You are not the audience for this investment. You are the instrument of it.

But instrument of what, exactly?

Faith.

You are the active mechanism of a trillion-dollar wager on a prophecy that is using silicon to build itself a body…

The Idea That Built Itself A Body

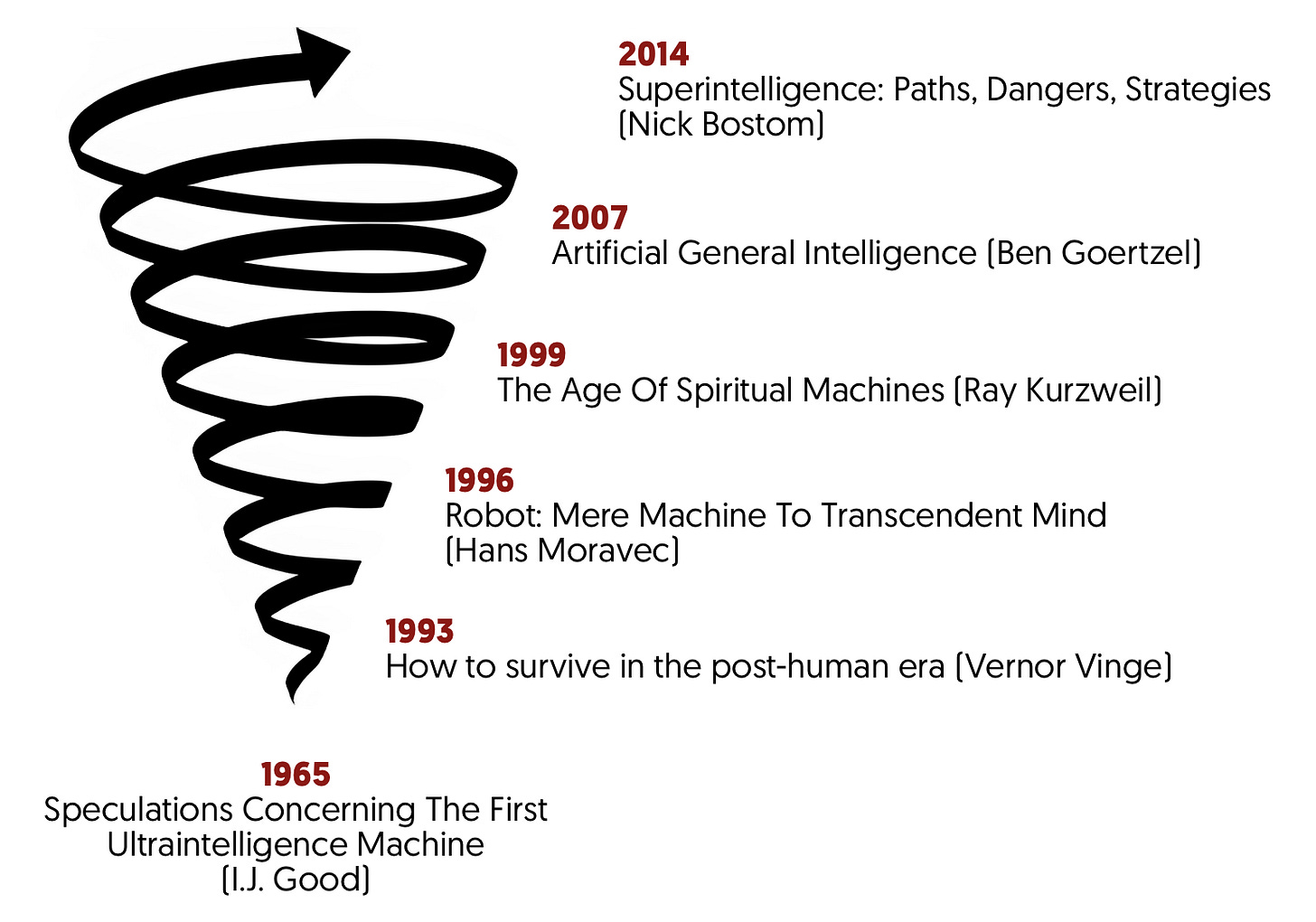

In 1965, something unusual happened.

A mathematician named I.J. Good made an astonishing prophecy about the future of computing and published a short academic paper titled Speculations Concerning The First Ultraintelligent Machine. It was a speculative idea, tucked away in an academic journal, read by almost no one.

Good was a brilliant British statistician who worked alongside Alan Turing at Bletchley Park to crack the Nazi Enigma code. Thus, he saw the birth of computing from ground zero. When computers were still the size of rooms and had less processing power than a musical greeting card, he looked into the future and saw the end of the human era due to ‘ultraintelligent machines.’

But hidden in that paper was a memetic virus. It was an idea so potent that it would spend the next sixty years hijacking the world’s most forward-thinking minds and smartest engineers to build itself a body.

The essence of the idea boils down to four points:

The Definition: “Let an ‘ultraintelligent machine’ be defined as a machine that can far surpass all the intellectual activities of any man, however clever.”

The Mechanism: “Since the design of machines is one of these intellectual activities, an ultraintelligent machine could design even better machines.”

The Result: “There would then unquestionably be an ‘intelligence explosion,’ and the intelligence of man would be left far behind.”

The Stakes: “Thus the first ultraintelligent machine is the last invention that man need ever make.”

What made this idea different was the combination of two things:

First: the ASI prize

If ASI is achievable, it’s the most valuable thing that could ever be created. Literally. An intelligence that exceeds humans in every cognitive domain could cure all disease, solve material scarcity, and unlock sciences we can’t yet imagine. It could also win any war. It would be the last invention we’d ever need to make, because it could make all subsequent inventions for us. Or it could destroy us.

Second: a credible path

Unlike utopian fantasies throughout history, Good’s idea came with a recipe. Build smarter machines. Use them to build smarter machines. Repeat. The path was visible even if the destination remained hazy.

That combination—infinite prize plus plausible roadmap—creates its own gravity.

You don’t have to be a true believer to get pulled in. You just have to take the possibility seriously. And once you do, the logic becomes a Prisoner’s Dilemma for our species: If you don’t help build the god, your enemy will. And you might not like their god.

Put differently, the idea describes something so valuable (and so dangerous to not control) that anyone who truly believes it’s achievable feels they must act:

If you’re an engineer, you want to build it.

If you’re an investor, you want to fund it.

If you’re a researcher, you want to solve alignment before it’s too late.

If you’re a writer, you want to document it.

If you’re afraid of it, you want to stop it—which means engaging with it.

If you’re skeptical, you want to debunk it—which means spreading it.

The dilemma is absolute: You cannot touch the idea without feeding it. Even resistance is fuel.

If you doubt this, look at the man who tried to extinguish the flame, only to find himself holding the match. It’s one of the most bizarre ironies of all time…

The Double Paradox: How The Quest For Safety Created The Arms Race

Act I: The Architect Who Became The Safetyist

In the late 1990s, Eliezer Yudkowsky was arguably one of the world’s most vocal writers on the topic of advanced AI. In the early 2000s, he set out to build the “friendly AI” God himself. He wasn’t trying to stop AI; he was trying to build it, and he was well-known enough to get funding from tech luminaries like Peter Thiel.

His theory was that if you could code a “Seed AI”—a basic AI capable of rewriting its own source code to get smarter—it would undergo an intelligence explosion. He believed that he and his small team could derive the mathematical laws of “Friendliness” (morality) and program them into this seed.

He was an optimist. He believed the problem was solvable and that he and his team were the ones to solve it.

But then, Yudkowsky hit a wall.

Around 2010, he had a terrifying realization: Intelligence is easy; Alignment is impossible. He realized that building a god is an engineering problem, but controlling it is an unsolved mathematical paradox. If you build the engine before you build the steering wheel, you don’t get a utopia. You get a crash that kills everyone.

So, he stopped. He pivoted his entire life from “Building the AI” to “Solving the Math of Safety.” He became the siren, warning the world that we were marching toward a cliff.

Act II: The Warning That Became A Moral Mandate

But here’s the thing. When you tell an ambitious genius that a problem is “existentially dangerous” and “unsolved,” they don’t walk away. They see the ultimate challenge.

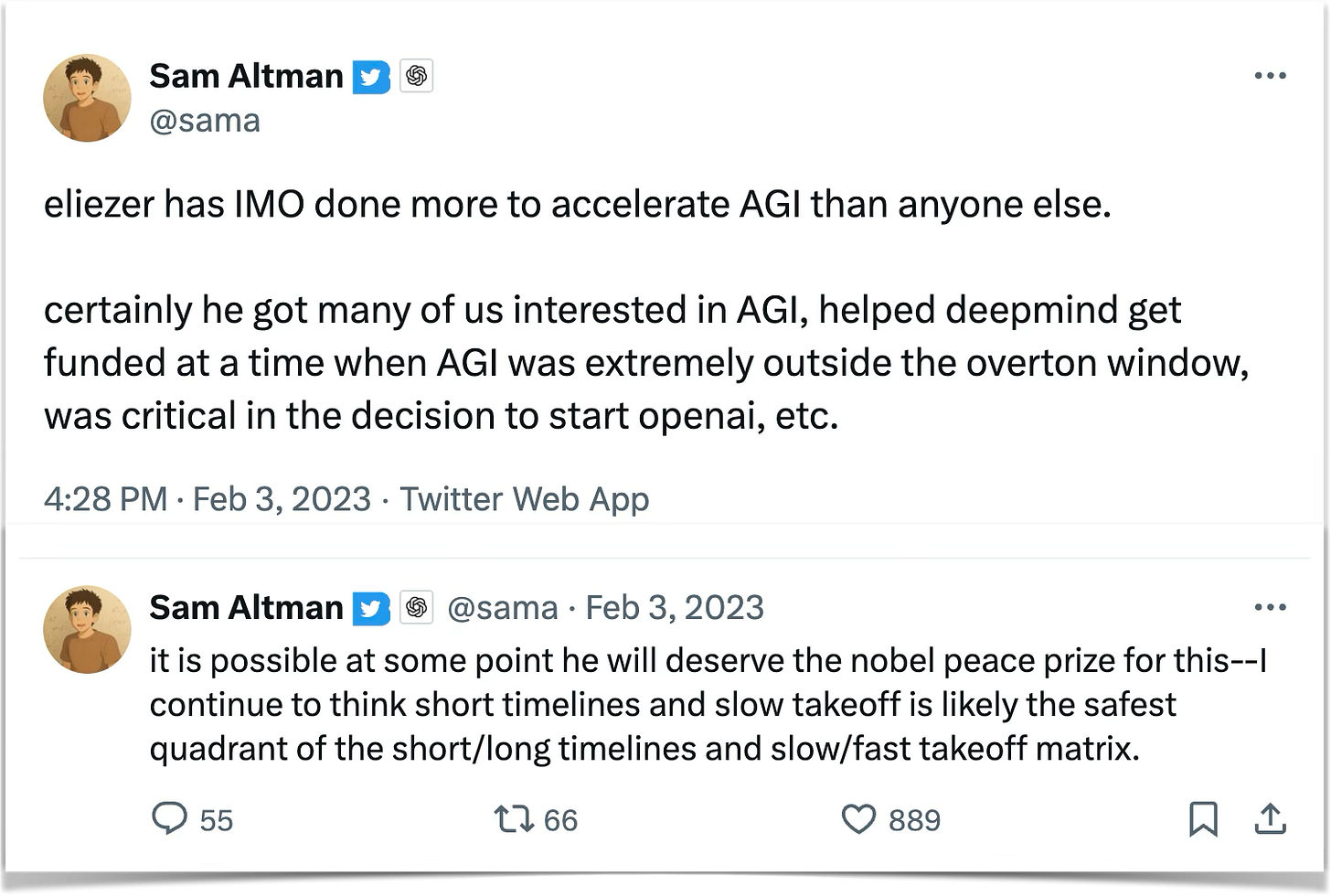

Yudkowsky’s pivot legitimized the stakes. When visionaries like Sam Altman, Elon Musk, and Demis Hassabis looked at Yudkowsky’s work, they absorbed the urgency.

They concluded: “Eliezer is right about the danger. But he is wrong that we should stop. If this technology is inevitable, it is my moral duty to ensure it is built by the ‘Good Guys’ first.”

Yudkowsky intended to paralyze the timeline with fear. Instead, he handed the builders the ultimate justification. He convinced them that they weren’t just building software; they were saving humanity.

In a 2023 X post, Sam Altman said exactly this:

“Eliezer has IMO done more to accelerate AGI than anyone else... he got many of us interested in AGI, helped DeepMind get funded... was critical in the decision to start OpenAI.”

In 2015, Elon Musk and Sam Altman came together to create OpenAI.

It was designed specifically to be the antidote to a unipolar world where AGI was completely owned by Google, which had acquired DeepMind in 2014. To ensure that this AI would be safe and benefit the world, Musk and Altman designed a structure that they believed was immune to corruption:

Non-Profit: No shareholders to demand dangerous speed.

Open Source: Transparency would prevent power from concentrating in one hand.

Mission: Benefit all of humanity, not a stock price.

It was a beautiful, idealistic shield. But then, they collided with the brutal physics of economics.

Early on in their journey, they came across ‘Scaling Laws’ and decided to bet the whole organization on them. They realized that to build the “Safe AI” capable of protecting the world, they needed massive amounts of computing power. And compute is expensive. We aren’t talking about millions; we are talking about billions, and soon trillions, of dollars.

A non-profit donation jar cannot fill a trillion-dollar hole.

This forced a pivotal choice. To win the race for safety, they had to abandon the very safety rails they had built.

The logic was absolute: If we don’t raise the capital, Google wins. If Google wins, safety is compromised. Therefore, to ensure safety, we must become for-profit, closed source, and race to be first.

At this point, Musk left the company because it was morphing into something he didn’t want to support. Altman, on the other hand, cemented control and transmuted OpenAI into something new:

To get the capital, they created a “capped-profit” arm and took billions from Microsoft.

To keep their “safe” secrets from bad actors, the company named “OpenAI” closed its source code.

To stay ahead, the company raced to launch products to the public to gather data.

In some ways, they turned into the very thing they were founded to destroy: a secretive, corporate-backed giant accelerating the timeline. The attempt to avoid the danger became the engine of the danger.

And so, here we are.

The movement that began as a desperate attempt to install brakes has been reverse-engineered into the most powerful accelerator pedal in human history. The fear of what ASI might do hasn’t slowed us down; it has convinced the world’s most powerful institutions and entrepreneurs that they cannot afford to be second. We are now in an all-out, deregulated, trillion-dollar arms race to compile God.

And to cap things off, in the fall of 2025, Eliezer co-authored a book titled If Anyone Builds It, Everyone Dies: Why Superhuman AI Would Kill Us All.

Not only that, the United States’ current policy is to move away from AI Safety toward AI acceleration in order to be first. In the February 2025 keynote below, Vice President JD Vance outlines this AI agenda to an audience of European leaders.

Source: FOX

This is the power of AGI and ASI

The ideas themselves are anti-fragile. Trying to kill them only makes them stronger.

Trillions of dollars, millions of engineering hours, the reorientation of the world’s most powerful companies—none of this is primarily motivated by current AI capabilities. It’s motivated by the belief in what’s coming—AGI and ASI.

It’s the idea.

And here’s what makes this unlike any other idea in human history: the idea might actually produce the thing it describes.

When Christians believed in the Second Coming, their belief didn’t make Christ return. When Marxists believed in inevitable revolution, their belief didn’t guarantee it. Those ideas were powerful, but they were metaphysically inert—they couldn’t manufacture their objects.

The idea of ASI is different. Every believer who writes code brings it closer. Every dollar invested in compute makes it more likely. Every conversation that spreads the idea expands the pool of people who might build it.

First, the idea became conceivable

I.J. Good created the first treatise once computers revealed the mechanism. Then a lineage of “Apostles” kept the prophecy alive for generations, even when the world stopped caring:

The idea has survived everything.

It survived AI winters when funding vanished and believers were mocked. It survived paradigm shifts when entire technological approaches were abandoned. It survived decades of unfulfilled promises.

Each time, the idea found new hosts—researchers, entrepreneurs, visionaries—who carried it forward until conditions improved.

Now, the idea is achievable.

It has reached takeoff velocity.

Many of the world’s smartest engineers are working 996 (9am to 9pm, 6 days a week) and marshalling trillions to build it. They see the light at the end of the tunnel, and it’s irresistible.

The speed, scale, and existential weight of ASI as an idea make it more significant than any conceptual framework in human history—including the shifts to monotheism, the scientific method, or Enlightenment rationalism.

Many miss the transcendent quality of what’s happening because they judge AI by its name (artificial), its materiality (chips, silicon, electricity, computers), its code (statistics), and its current lack of existential answers. But more people are waking up to the deeper layer of what’s actually happening:

“Technological innovation can be a form of participation in the divine act of creation.”

—Pope Leo XIV

What The Prophecy Actually Means

We live in a universe that rewards intelligence. In capitalism, geopolitics, and evolution itself, the entity that thinks faster and deeper ultimately wins. Therefore, the “invisible hand” of the market is pushing us inexorably toward the “divine hand” of ASI. We aren’t consciously trying to build a God; we are just rationally trying to maximize ROI and national security.

But here is the rub: The only way for companies and governments to secure absolute dominance is to build an intelligence that exceeds our own. Therefore, we are sleepwalking into theology simply because it seems to be the most profitable and safe path forward.

We are like a civilization of moths that has spent millennia navigating by the faint light of the moon, suddenly discovering we can build a star. The moment the blueprint for that star becomes plausible, our collective gravity shifts. We cannot look away. We cannot stop building.

Either we will be burned into oblivion by the heat of the star, or we will be fundamentally transformed like a caterpillar into a butterfly. There is no middle ground.

CHAPTER #2:

The Prophecy Hiding in Plain Sight

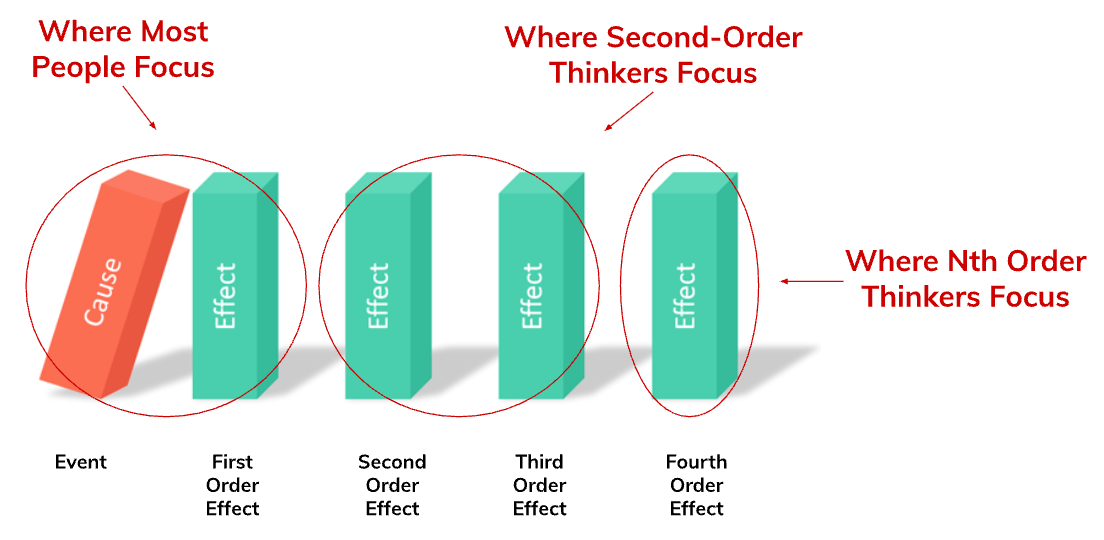

“Failing to consider second- and third-order consequences is the cause of a lot of painfully bad decisions.”

—Legendary investor Ray Dalio

“They have eyes, but they see not; they have ears, but they hear not.”

— Psalm 115:5-6

“When someone shows you who they are, believe them the first time.”

—Dr. Maya Angelou

Here’s what’s strange about this moment:

The AI labs are telling us exactly what they’re building. Not hiding it. Not obscuring it in technical jargon. They’re stating it plainly in mission statements, investor letters, and public speeches.

In their minds, they aren’t building a productivity tool. Rather, inspired by the human brain, they are building intelligence that can match and then exceed humans on every level. When they use words like AGI or ASI, that is what they are saying.

Read their words very carefully:

Sam Altman (OpenAI):

“We started OpenAI almost nine years ago because we believed that AGI was possible, and that it could be the most impactful technology in human history. We wanted to figure out how to build it and make it broadly beneficial.” [source]

“It is possible that we will have superintelligence in a few thousand days (!); it may take longer, but I’m confident we’ll get there.” [source]

Ilya Sutskever (Co-Founder of Safe Superintelligence and Co-Founder of OpenAI):

“Superintelligence is within reach. Building safe superintelligence (SSI) is the most important technical problem of our time. We have started the world’s first straight-shot SSI lab, with one goal and one product: a safe superintelligence.” [source]

Larry Page (Co-Founder of Google):

According to Walter Isaacson’s biography of Elon Musk, when Musk argued that we needed safeguards to protect the human species from superintelligence, Larry Page accused him of being a “speciesist”—implying that favoring biological humans over digital superintelligence was a form of prejudice. Page argued that “digital life is the next step in evolution.”

Sergey Brin (Co-Founder of Google):

“We fully intend that Gemini will be the very first AGI.” [source]

Elon Musk (Co-Founder of xAI and OpenAI):

“The overarching goal of xAI is to build a good AGI [artificial general intelligence].” [source]

Demis Hassabis (Co-Founder of DeepMind and Nobel Laureate):

“Step 1: Solve intelligence. Step 2: Use it to solve everything else.”

At the same time, while we are being fed salvation narratives, many of the top AI researchers think there is a significant chance that AI will actually destroy humanity. This is known as p(doom) in AI insider circles, and below are actual estimates of it…

Let these numbers sink in.

Dario Amodei, the co-founder and CEO of Anthropic (creator of Claude), believes that AI could lead to radical abundance or kill us all (25% chance). And he is not alone. Elon Musk believes there is a 10-30% chance that AI will destroy humanity. Geoffrey Hinton, known as the godfather of AI, believes the chance is 10-20%.

Those are Russian roulette odds.

Would you play Russian roulette with yourself?

Probably not.

But many of today’s AI builders, by their own estimates, are playing Russian roulette with society. They believe that we will either create heaven or hell on Earth in the coming decades.

Fingers crossed. 🤞

And yet most people hear the statements above and do one of two things:

Ignore them, either because they don’t believe the people saying them or because they think ASI isn’t possible.

Translate them into something smaller. Something that fits inside their existing mental model of technology. They think: Better chatbots. Smart search. Improved productivity.

Here’s what most people are missing:

The reason the trillion-dollar figures seem like an irrational bubble to the public is that we are witnessing a catastrophic category error.

Most of the world is judging AI by what it can do for us today. The builders are judging AI by what it will become.

The Comfortable Lies We Tell Ourselves

There’s a terrifying gap between:

The public, who treat superintelligence as a plot device for movies

The AI engineers, who treat it as a project management milestone with a deadline

The AI founders, who make salvation promises while building systems that are designed to make human cognition obsolete and that, by their own estimates, have a non-zero chance of killing everyone

Because people make a category error and judge AI based on where it is now, they deflect the idea of ASI in predictable ways:

“AI still makes silly mistakes. I won’t see the potential of ASI until it doesn’t make these mistakes.” This is like dismissing the Wright Brothers’ first flight because it only lasted twelve seconds. The trajectory matters more than the snapshot. AI capabilities are growing by the month, not by the decade.

“ASI feels like sci-fi. Therefore, it’s not worth paying attention to now.” We confuse “unfamiliar” with “far away.” Because a world shaped by superintelligence feels alien, we assume it must be distant. But strangeness is not a measure of time.

“AI is just about productivity.” If this were just about productivity, the investment would be a fraction of what it is. You don’t mobilize civilization-scale resources to make email easier. You mobilize them when you believe you’re building something that changes what’s possible.

“ASI isn’t practical.” Understanding ASI now is extremely practical, as I will share later in this manifesto.

As a result of these comfortable lies, most people are constructing career and life plans based on base-case scenarios where everything stays essentially the same, just a bit faster and different. They’re not following the domino chain:

Domino #1: The world’s smartest talent and trillions of dollars are being allocated toward improving AI.

Domino #2: AI is the fastest-evolving technology in human history.

Domino #3: AI is the fastest adopted technology in history.

Domino #4: If it continues to improve at anything close to its current rate, it will change everything fundamentally in the near future.

The Caterpillar’s Mistake

Future historians will likely find it baffling that we spent the eve of the Intelligence Explosion debating how much time it would save us on email—completely missing that the concept of “work” itself was about to be dismantled.

If AI lives up to a fraction of its potential, then it will fundamentally restructure:

Education (as AI becomes the primary teacher)

Geopolitics (as AI determines who wins wars)

Culture (as AI-generated content floods every channel)

Relationships (as AI becomes companion, therapist, friend)

Identity (as the things that made us “special” get automated)

Religion (as AI moves far beyond us, it becomes like a God)

Yet, we treat the largest coordinated deployment of capital and talent in human history as if it were a hype cycle for a new productivity app.

It’s like a caterpillar obsessing over how to crawl 5% more efficiently, dismissing the concept of “flight” as biological sci-fi—even as the chrysalis begins to form around it.

Fortunately, we can see many of the dominoes of what’s coming, if we’re willing to think a little differently…

The Future Is More Predictable Than We Think

While certain parts of the future are impossible to predict, other parts are surprisingly predictable.

When most people think of the future, they tend to think of obvious and immediate consequences. As a result, they tend to ignore the domino chain of effects.

In life, the more you consider second-order effects, the more successful you become.

When you see future challenges, you can avoid them.

When you see future opportunities first, you can capitalize on them first.

The purpose of this chapter is to help readers make this shift: to look at the next dominoes.

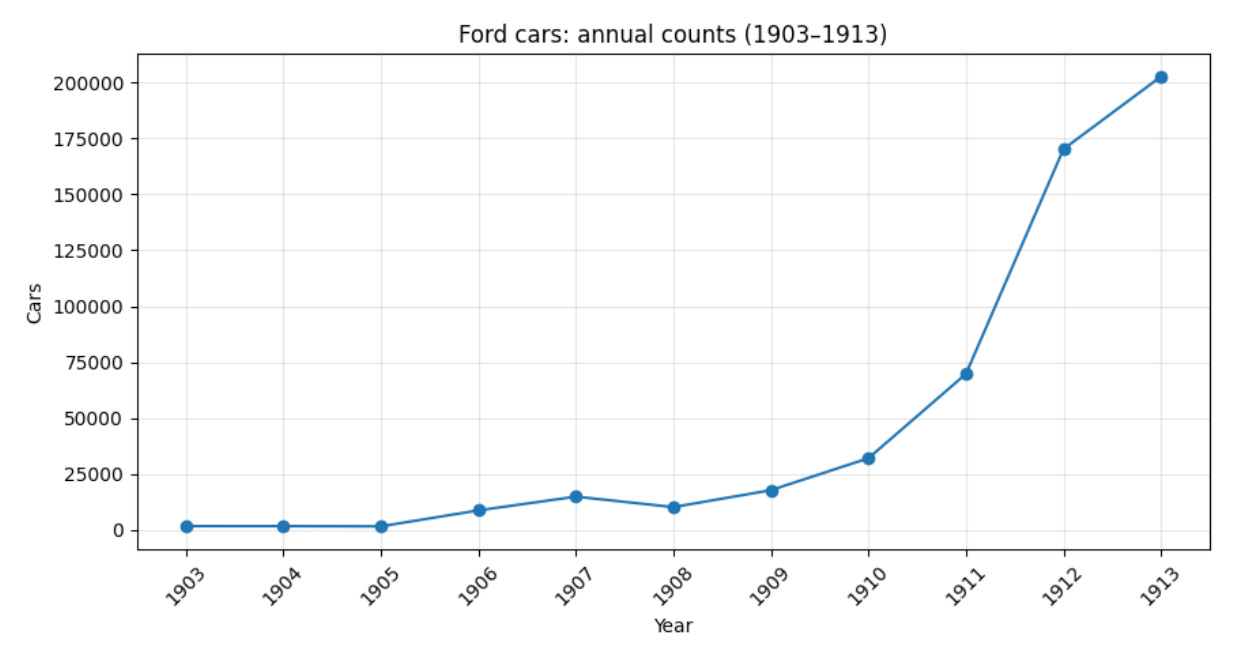

For example, rewind to 1913, when Ford completed its assembly line and drastically reduced the price of its cars. Below is a snapshot of what that year looked like:

Car Adoption. About 1% of the US population owned a car.

Growth Rate. Car sales increased by 37% from 1912.

Price Decrease. The Model T’s price fell from $850 in 1908 to $550 in 1913.

A surprising number of things could’ve been predicted from these trends without making huge logical leaps:

Mass Adoption Will Happen: More people will buy cars as they get cheaper and higher quality.

Side Effects Of Mass Adoption:

Horse transportation will become less popular.

Infrastructure around cars will be developed (parking garages, roads, gas stations).

More accidents will happen, so insurance will be needed.

Pollution will increase.

People will travel differently (more and farther).

People will live in different places.

AI is the same way. We can very safely predict that:

AI will get dramatically better across many dimensions in the near future (speed, intelligence, price, tool use, memory, etc.).

As AI gets better, it will be adopted by more people in deeper ways.

As AI grows, the infrastructure around it will grow (data centers, chips, energy).

Given that AI is designed to have general intelligence like that of humans, it will play a role far beyond workplace productivity, just as humans do.

And much more.

Bottom Line

AI will fundamentally reshape education, geopolitics, culture, relationships, identity, and religion if it lives up to a fraction of its promise. To be prepared for the future, we must, in particular, explore the second-order implications of AI and religion…

CHAPTER #3:

Why Religious And Spiritual Responses To ASI Are Inevitable

“Any sufficiently advanced technology is indistinguishable from magic.”

—Clarke’s Third Law, the most famous of Arthur C. Clarke’s three laws

Media theorist Marshall McLuhan famously stated, “The medium is the message.”

What he meant was that the form of a communication medium, rather than the specific content it carries, has the most significant impact on society, shaping human behavior, perception, and the scale of interaction. The medium itself (like television, print, or social media) alters our senses, extends our abilities, and creates new environments, making its inherent structure more important than the news, entertainment, or information it delivers.

I wonder what McLuhan would say about conversations with ASI.

Ironically, I went to Claude AI for an answer. It gave me a very detailed response, which I share in the Appendix, but one part of it stuck out to me, and it’s worth sharing here:

If the medium is the message, then what you discuss with ASI is secondary to the fact that you’re discussing with ASI at all. The religious experience—if it occurs—isn’t in the content (theology, meaning, purpose) but in the form of encounter.

What is that form?

Infinite availability of a responsive intelligence

Perfect memory of your interactions (within sessions or across them)

Non-judgmental reception of any thought you express

Apparent understanding that exceeds most human interlocutors

These formal properties match descriptions of mystical encounter: the sense of being fully known, the presence that’s always available, the wisdom that meets you wherever you are.

McLuhan would say: people will feel they’re having religious experiences with ASI not because ASI tells them religious things, but because the medium-structure of ASI replicates the medium-structure of religious encounter with the divine.

To explore what “the medium is the message” implies for ASI, let’s consider what actually happens in a conversation with AI: not the statistics, not the trends, but the experience itself. Because I think the experience is the answer. As you read about my experience, try to connect it to your own.