Top AI Experts Predict Artificial General Intelligence In 3-5 Years. Now What?

Personal Update: Artificial General Intelligence (AGI) will be the most important technological innovation in human history. It is happening in a few years, and it will change everything for everyone.

Against all odds, we happen to be alive to witness this historic moment.

When I was in high school in the late 1990s, I remember reading books like Profiles of the Future: An Inquiry into the Limits of the Possible by author Arthur C. Clarke and The Age of Spiritual Machines: When Computers Exceed Human Intelligence by futurist Ray Kurzweil. These books painted wondrous pictures of possibilities that exploded my adolescent mind.

At the same time, I hated the idea that I had to wait decades for these innovations.

Well, sci-fi is now a reality.

And, as the saying goes, be careful what you wish for. The only thing that we can confidently say about the future is that it will be incredibly tumultuous. Every person will need to become a super adapter in order to keep up with the radical change.

Frankly, I’m still processing what I think about it, and this article is me learning and thinking out loud. I offer more questions than answers.

On January 4, 2021, Sam Altman, the CEO of OpenAI, the leading Artificial General Intelligence (AGI) company, tweeted the following question…

If an oracle told you that human-level AGI was coming in 10 years, what about your life would you do differently?

It’s now three years later, and AI has advanced faster than almost anyone, even the top people in AI, expected.

For example, in a November 29, 2023 interview, Elon Musk gave a three-year timeline for when AI will surpass the smartest human:

ELON: If you say “smarter than the smartest human at anything”? It may not quite be smarter than all humans - or machine-augmented humans, because, you know, we have computers and stuff, so there’s a higher bar… but if you mean, it can write a novel as good as JK Rowling, or discover new physics, invent new technology? I would say we are less than three years from that point.

Why should we care what Musk thinks? Especially when his previous predictions about the release of Tesla’s full self-driving have been off?

Here are a few reasons…

He co-founded OpenAI (the leading AGI company in the world).

He has been way ahead of the curve on AI progress (and the importance of AI safety).

He is the founder of an AI company, xAI (the maker of Grok).

Tesla is the leading company in the world building AI that navigates the physical world.

Although the timelines of his predictions have been off, the actual predictions of milestones have never been off.

He may be the most successful innovator who has ever lived.

Moreover, Musk’s prediction is not an outlier. It falls within the range that many leading AI experts predict.

For example, at another recent conference, Sam Altman said that in 2024, OpenAI will release an AI that is even better than people are expecting. This is a big deal when you consider that the expectations of GPT-5 are already astronomical.

Source: APEC Summit, Curator: AISafetyMemes

INTERVIEWER: What is the most remarkable surprise you expect to happen in 2024?

SAM ALTMAN: The model capability will have taken such a leap forward that no one expected. The model capability—like what these systems can do—will have taken such a leap forward... no one expected that much progress. [It will be] just different from expectations. I think people have, like, in their mind how much better the models will be next year, and it'll be remarkable how much different it is.

To lend further credence to the idea that he wasn’t exaggerating, he recently tweeted:

4 times in the history of OpenAI––the most recent time was in the last couple of weeks––I’ve gotten to be in the room when we push the veil of ignorance back and the frontier of discovery forward. Getting to do that is the professional honor of a lifetime.

—Sam Altman

Again, Musk and Altman aren’t alone.

The consensus among AI experts is that:

AI will be smarter than even the smartest humans within 5 years.

It will become more and more autonomous until it has the ability to operate independently in the world to do things like starting companies, forming relationships, launching products, investing, etc. without human involvement.

It will self-improve rapidly.

Let these predictions sink in.

Picture everything you do at work. Now, imagine AI being able to do it better and faster than you by November 2026.

It’s like we can see an alien species rapidly approaching, and we estimate that it will arrive soon. We know it will change life as we know it. And it raises the question: what should we as average human beings do to prepare for this arrival?

Or stated differently, the next decade or two may be equivalent to thousands of years of change relative to today’s rate of change. How do we fathom that amount of change and plan our lives around it?

Bottom line: AGI is likely to arrive in 3-5 years. What should we do about it?

The Sam Altman Question is challenging because it causes all of our mental models for reasoning about the future to fail.

This makes me feel a certain way…

Part Of Me Wants To Look Away From The Sam Altman Question

For my whole life, I have been passionate about technological progress and I always wished it was happening faster.

This is the first time I have actually wanted things to go slower.

I have incredible faith in the ability of humanity to adapt. I mean, here I am typing an article on a computer that will be transported instantaneously across the planet. The fact that I can do this with the same DNA as my hunter-gatherer ancestors is astounding. But, I’m just not sure that we can individually and culturally adapt as fast as we need to.

If Covid was the 100x easier dress rehearsal before the big performance, then my concerns are well placed.

For me personally, when GPT-4 came out in March 2023, and I realized how close we were to AGI, I actually went through the famous five stages of grief multiple times…

Then, for several months, I went back to my life as normal while using GPT-4 like it was just any other tool. In retrospect, my mind didn’t know how to deeply reflect on the implications of near-term AGI, so I just flinched away.

But, that changed with many of the recent AI announcements and breakthroughs…

6 Reasons Why I Can No Longer Ignore The World-Changing Implications Of Near-Term AGI

To explain what changed for me, it’s critical to understand Artificial Superintelligence (ASI).

ASI was named and defined by Oxford philosopher and leading AI thinker Nick Bostrom all the way back in 1997. He defined it as:

ASI Definition: “An intellect that is much smarter than the best human brains in practically every field, including scientific creativity, general wisdom and social skills.”

In 2015, Tim Urban translated the core thesis of Bostrom’s 2014 book, Superintelligence, into a must-read long-form article called The AI Revolution: The Road to Superintelligence. This article is still relevant today.

My favorite part of the article is how Urban visually explains the logic behind the arrival of ASI. Here is a summary of the big points along with a few newer points and a deeper dive into each:

We underestimate AI because we only judge it by its current intelligence.

We underestimate AI’s speed of improvement because we are not good at understanding steep exponential growth.

We simply can’t fathom intelligence beyond our own.

AI capability will expand seemingly overnight when AI can improve itself.

We seemingly have a clear path to AGI without any major obstacles.

AI will think and move 1,000x faster than us.

#1. We underestimate AI because we only judge it by its current intelligence

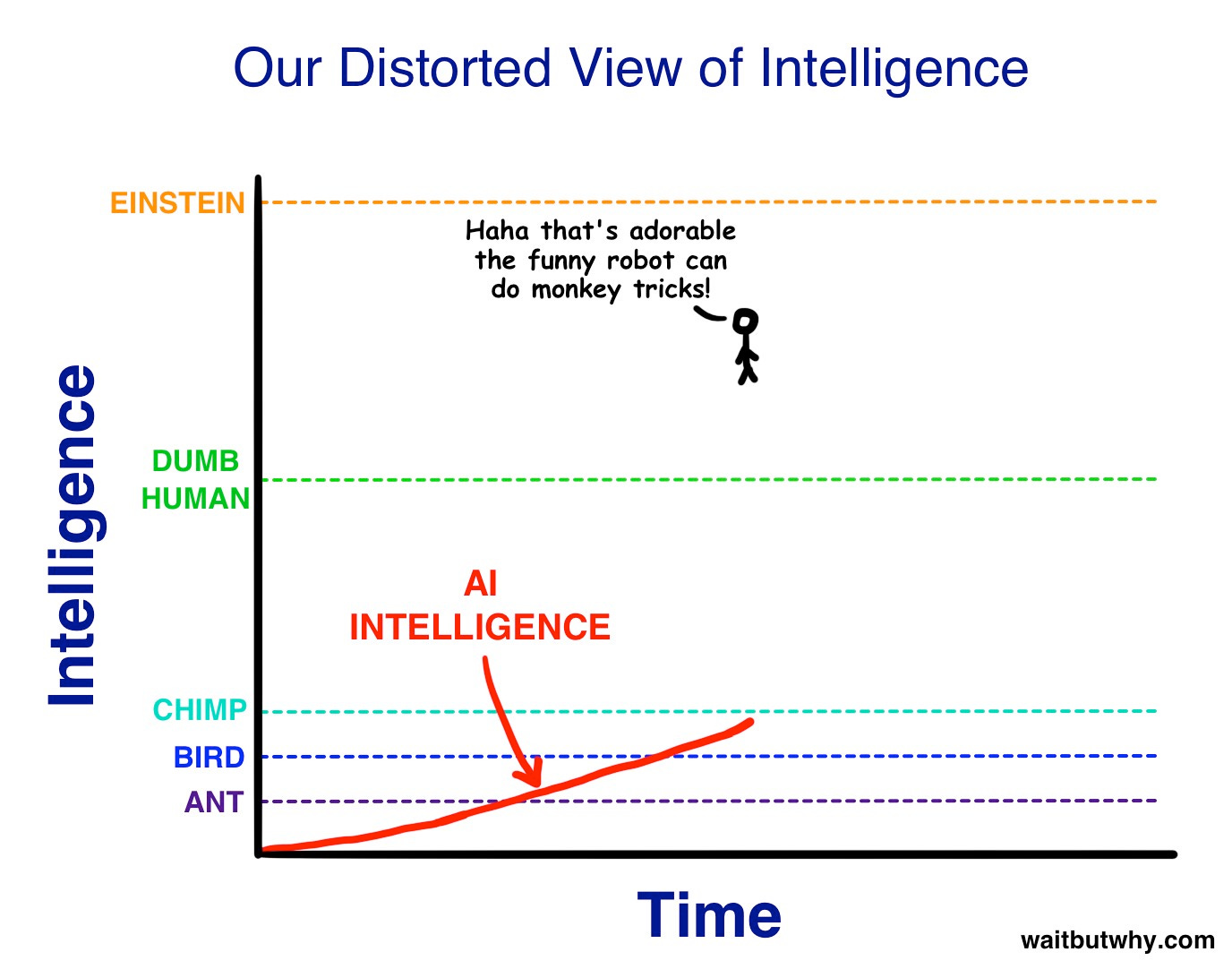

In this phase, it’s easy to ignore AI even though it’s close because it’s still below us from an overall intelligence perspective. The image below shows where we still roughly are, except AI is now above the green line:

#2. We underestimate AI’s speed of improvement because we are not good at understanding steep exponential growth

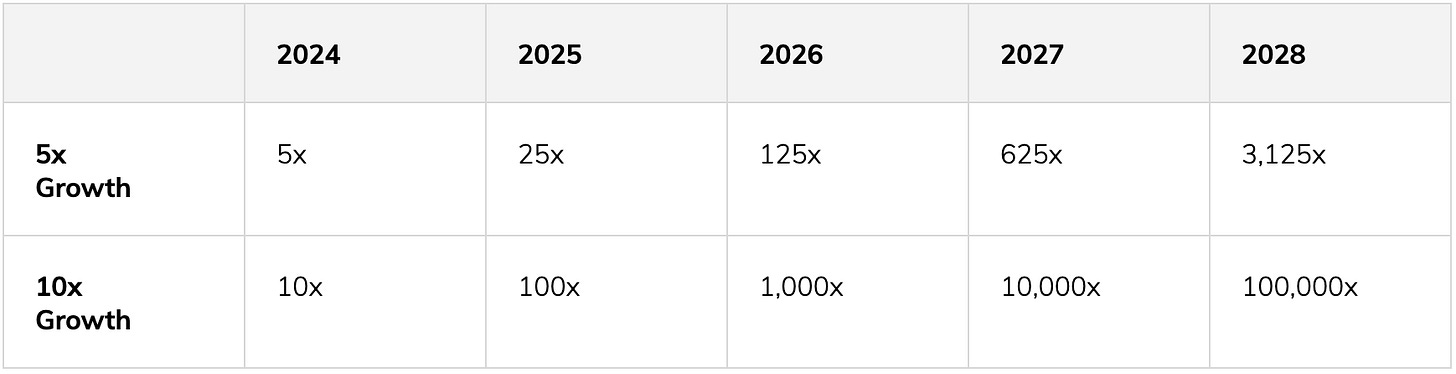

In an October 2023 interview with UK Prime Minister Rishi Sunak, Elon Musk shared the following growth rate for AI capability that he’s observing:

Source: Rishi Sunak & Elon Musk: Talk AI, Tech & the Future (October 2023)

The pace of AI is faster than any technology I've seen in history by far, and it seems to be growing in capability by at least 5-fold, perhaps 10-fold, per year.

—Elon Musk

Extrapolating Musk’s statement by five years, we’d get between 3,125x-100,000x the AI capability we have now by 2028.
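The arithmetic behind that range is plain compounding. Here is a minimal sketch of it; the function name is my own, and it assumes the yearly multiple stays constant for all five years, which is the big assumption baked into any extrapolation like this:

```python
def compound_growth(factor_per_year: float, years: int) -> float:
    """Total multiple after `years` of constant yearly growth (an assumption)."""
    return factor_per_year ** years


# Musk's "at least 5-fold, perhaps 10-fold, per year", held for five years.
low = compound_growth(5, 5)
high = compound_growth(10, 5)

print(f"{low:,.0f}x to {high:,.0f}x")  # 3,125x to 100,000x
```

If the real growth rate slows down or speeds up in any of those years, the end result moves a lot, which is exactly why the range is so wide.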

Musk is not alone in noticing the high rate of change.

On the Ezra Klein podcast earlier in the year, Sam Altman also shared the 10x number:

Two quotes from the clip stick out:

Moore's Law is a doubling of transistors every two years. AI is growing at a rate of 10x per year in terms of model sizes and associated capabilities.

—Sam Altman (paraphrased)

When you're on an exponential curve, you should generally, in my opinion, take the assumption that it's going to keep going.

—Sam Altman

Tim Sweeney, founder of Epic Games, one of the largest gaming companies in the world, said something similar about AI's growth trajectory:

Artificial intelligence is doubling at a rate much faster than Moore’s Law’s 2 years, or evolutionary biology’s 2M years. Why? Because we’re bootstrapping it on the back of both laws. And if it can feed back into its own acceleration, that’s a stacked exponential.

—Tim Sweeney

These quotes caught my attention for two reasons:

Moore's Law is the reason why a $100 smartphone is more powerful than any computer from 1965.

Something growing 10x per year would mean going from computers that fill up rooms to smartphones in a few years rather than a few decades.
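To make the "few years rather than a few decades" comparison concrete, here is a rough sketch. The million-fold target is an illustrative number I picked, not a figure from Altman or Sweeney, and both growth rates are assumed to hold steady the whole time:

```python
import math


def years_to_reach(multiple: float, factor: float, period_years: float) -> float:
    """Years needed to grow by `multiple` when growing by `factor` every `period_years`."""
    return period_years * math.log(multiple) / math.log(factor)


target = 1_000_000  # hypothetical million-fold improvement, for illustration only

moore = years_to_reach(target, factor=2, period_years=2)     # doubling every two years
tenfold = years_to_reach(target, factor=10, period_years=1)  # 10x every year

print(f"Doubling every 2 years: about {moore:.0f} years")  # about 40 years
print(f"10x every year: about {tenfold:.0f} years")        # about 6 years
```

The same improvement that takes roughly four decades at a Moore's Law pace takes roughly six years at 10x per year.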

This is all a big deal because we humans have trouble thinking about exponential curves in the first place. We’re way worse with exponentials that have such a steep growth curve.

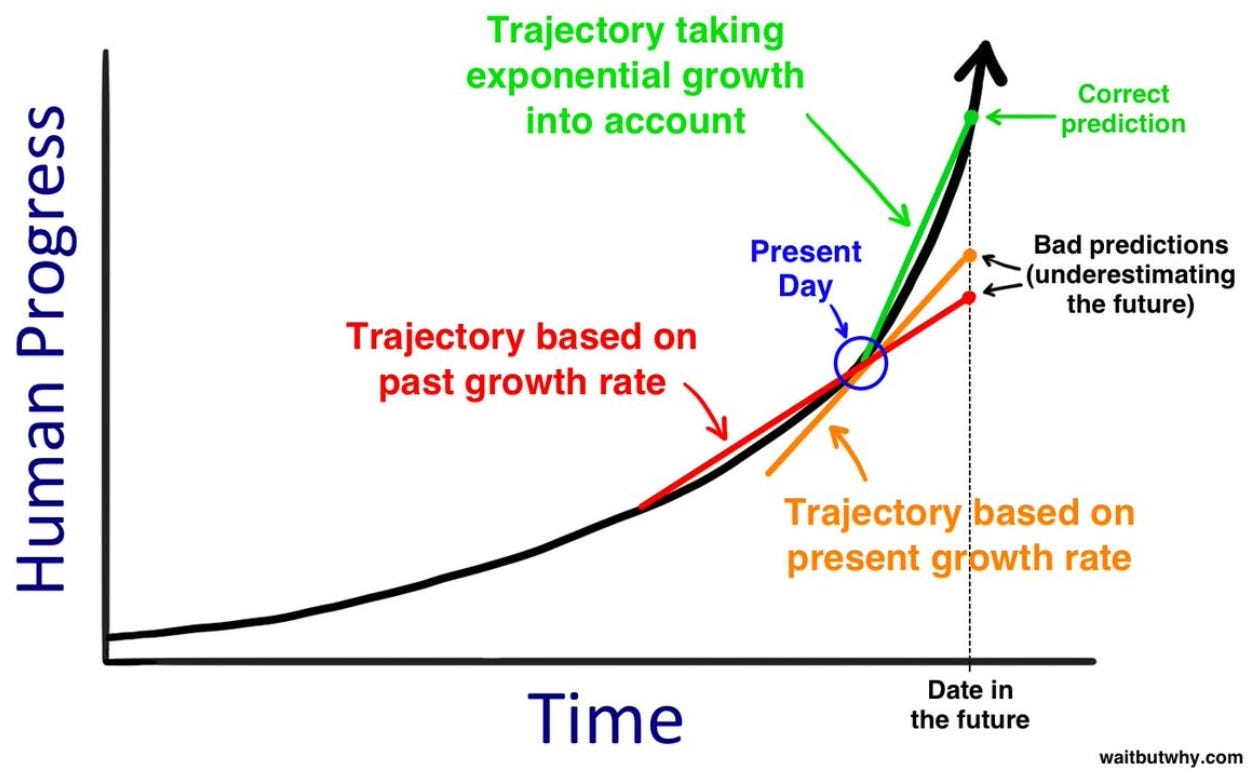

Tim Urban uses the following visual to demonstrate this bias we have:

He also uses the graph below to capture how extrapolating based on exponential growth curves is tricky:

In other words, we’re used to thinking in a linear world. Therefore, we extrapolate based on a linear slope rather than an exponential one.

Bottom line: We have trouble understanding exponentials because we’re just not used to anything in our environment growing at that rate for so long.
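Here is a small sketch of how far off a linear guess can be. It is my own illustration rather than Urban's: it assumes capability really does grow 10x per year, then compares that with a forecast that simply extends the straight line drawn through the first year of progress:

```python
def exponential(year: int, factor: float = 10.0) -> float:
    """Capability after `year` years of assumed 10x-per-year growth (starts at 1)."""
    return factor ** year


def linear_forecast(year: int, factor: float = 10.0) -> float:
    """Extend the straight line through year 0 and year 1 of the same curve."""
    slope = exponential(1, factor) - exponential(0, factor)
    return exponential(0, factor) + slope * year


for year in range(1, 6):
    print(f"Year {year}: linear guess {linear_forecast(year):,.0f}x, "
          f"exponential {exponential(year):,.0f}x")
```

By year five, the straight-line guess lands at 46x while the exponential is at 100,000x. The two forecasts agree perfectly after one year, which is what makes the linear intuition feel safe.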

#3. We simply can’t fathom intelligence beyond our own

To illustrate that there are many levels above human intelligence, which are impossible for us to fathom, Urban uses the following intelligence stairway:

This image is important on two levels.

First, it’s impossible for us to comprehend advanced intelligence beyond our own.

More specifically, it’s hard to comprehend in the same way it would be impossible for an ant to understand human intelligence.

Or even consider how difficult it is for teenagers to understand that their parents have wisdom that they may not be able to understand. Not only that, teenagers often think they are smarter than their parents. As the father of a 15-year-old, maybe I’m just venting here. :)

AI safety researcher Eliezer Yudkowsky uses several examples to show how it’s hard for us to understand advanced intelligence:

Source: Logan Bartlett Show

Second, there are way higher levels of intelligence above us.

Although we think we are so much smarter than other animals, we’re actually much closer to their intelligence than we are to much higher forms of intelligence that could be created.

This means that once a machine intelligence goes past human intelligence, making significant leaps beyond it won’t be as hard as the average person would think.

Another way of saying the same thing is that…

Humans are as dumb as it’s possible to be and still build a computer.

—Eliezer Yudkowsky

The video that helped me see just how close humans are in intelligence to primates is the one below. There is something shocking about watching a monkey easily and perfectly do a memory task that the smartest humans would struggle to do if they even could.

On this kind of task, at least, some primates appear to have better working memory than humans.

#4. AI capability will expand seemingly overnight when AI can improve itself

To illustrate how quickly intelligence could improve, Tim Urban uses the following chart:

The reason that the chart shoots straight up is that AI begins to improve itself without humans. While this sounded crazy the first time that I heard it, it now almost feels inevitable for a few reasons:

AI is creating synthetic data. Current AI models were primarily trained on data scraped from the web. Newer models are being pre-trained on synthetic data, which is “information that's artificially generated rather than produced by real-world events. Typically created using algorithms, synthetic data can be deployed to validate mathematical models and to train machine learning models” (Wikipedia).

AI is programming everything already. 41% of code on the largest code repository in the world is written by AI.

AI is training AI. AI is increasingly being used to train new AI rather than just humans filling this role.

AI is being used to automate computer chip design, manufacturing, and data centers. More and more automation is being used at every level of the hardware stack.

In other words, AI is slowly taking the driver’s wheel at each step of the process that would be necessary to rapidly improve itself.

The most recent example of this is a short clip in which Jensen Huang, the founder and CEO of Nvidia, the largest AI chip manufacturer, shares that AI is taking a larger and larger role in making chips:

Original Source: New York Times DealBook Summit, Curator: AI Safety Memes

Resource: Learn more about recent advancements in AI recursive self-improvement.

#5. We seemingly have a clear path to AGI without any major obstacles

In the past, I thought AI progress was like climbing a mountain.

When we’re climbing a mountain, the only thing we can see is the peak of the mountain we’re on. However, once we reach the peak, we can suddenly see even higher peaks to surmount.

Over the last five decades, the AI field has repeatedly gotten excited by the mountain it was climbing and predicted rapid progress. Each time, researchers eventually realized that there were much higher peaks that would take decades to climb.

I thought this time was similar.

For example, in Why AI is Harder Than We Think, academic AI researcher Melanie Mitchell explains how the field has historically over-predicted progress:

The philosopher Hubert Dreyfus called this a “first-step fallacy.” As Dreyfus characterized it, “The first-step fallacy is the claim that, ever since our first work on computer intelligence we have been inching along a continuum at the end of which is AI so that any improvement in our programs no matter how trivial counts as progress.” Dreyfus quotes an analogy made by his brother, the engineer Stuart Dreyfus: “It was like claiming that the first monkey that climbed a tree was making progress towards landing on the moon.”

As an example of this, starting in 2016, Elon Musk predicted that fully autonomous driving was about a year away. Each year since, he has pushed the prediction to the following year. It’s 2023 and Musk still thinks it’s imminent.

But, it seems like we may be on the final mountain.

For example, the video below from Jared Kaplan, the co-founder of Anthropic, one of the largest AGI companies, explains the shift:

Source: Stanford

Shane Legg, the co-founder of DeepMind, the division of Google with the largest AI breakthroughs, agrees:

Source: Dwarkesh Podcast

In other words, AI has been scaling in a surprisingly predictable way. And while there are many innovations that need to happen to reach ASI, they are likely to be solved quickly.

#6. AI will think and move 1,000x faster than us

One conversation that got me to realize what ASI might be like was between theoretical physicist David Deutsch and neuroscientist Sam Harris on a fascinating episode of the Making Sense podcast.

Below is the relevant part of the transcript that still sticks with me 8 years later:

Sam Harris: Just consider the relative speed of processing of our brains and those of our new, artificial teenagers. If we have teenagers who are thinking a million times faster than we are, even at the same level of intelligence, then every time we let them scheme for a week, they will have actually schemed for 20,000 years of parent time.
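Harris's number holds up as a rough estimate. Here is a minimal sketch of the conversion; the function and constant names are mine, and the only inputs are the week and the million-fold speedup from the quote:

```python
DAYS_PER_YEAR = 365.25  # average year length, including leap years


def subjective_years(wall_clock_days: float, speedup: float) -> float:
    """Years of thinking time experienced by a mind running `speedup` times faster."""
    return wall_clock_days * speedup / DAYS_PER_YEAR


years = subjective_years(wall_clock_days=7, speedup=1_000_000)
print(f"{years:,.0f} years")  # 19,165 years
```

One week of our time works out to a little over 19,000 years at a million-fold speedup, which Harris rounds to 20,000.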

I didn’t think about this interview again until I saw the following tweet from Elon Musk a year later:

In a few years, that bot will move so fast you’ll need a strobe light to see it.

Again, the idea stuck with me.

More recently, the idea finally made sense when I saw three videos…

First is a 19-minute video clip from the Lex Fridman podcast.

In this clip, Eliezer Yudkowsky, one of the most well-known researchers, writers, and philosophers on the topic of superintelligent AI, provides a thought experiment to more deeply understand what it would be like to be a human interacting with a species that is just as smart as you, but much slower.

If you’re in a hurry, I’d recommend that you watch the other two clips and come back to this one later.

Source: Eliezer Yudkowsky: Dangers of AI and the End of Human Civilization | Lex Fridman Podcast #368

Second is this footage of a drone accelerating from zero to 200 kilometers per hour in less than a second.

Source: AI Safety Memes

Third is this clip of how we might appear like statues to AI both cognitively and physically if it was 50x faster than us:

Curator: AI Safety Memes, Source: Adam Magyar

Berkeley AI researcher Andrew Critch breaks down why AI will be so much faster in a tweet:

Over the next decade, expect AI with more like a 100x - 1,000,000x speed advantage over us.

Why?

Neurons fire at ~1000 times/second at most, while computer chips "fire" a million times faster than that. Current AI has not been distilled to run maximally efficiently, but will almost certainly run 100x faster than humans, and 1,000,000x is conceivable given the hardware speed difference.

Bottom line: Although there may be disagreement on how quickly AI arrives and how quickly it takes off into superintelligence, there is no doubt that we are moving rapidly in that direction in the next decade.

Despite how big this news is, it’s hard to metabolize. As Sam Altman said on the Ezra Klein podcast, it feels like the calm before the storm in February 2020, when we heard of Covid spreading exponentially around the world.

The Sam Altman question still looms for me. What do we as average people do now?

The Sam Altman Question Flummoxes Me

I want to live happily in a world I don’t understand.

—Nassim Taleb

I’ll be frank and put all of my cards on the table.

I’m not sure what to think, feel, or do about the situation.

Currently, I vacillate between the following four possibilities…

Wait and adapt.

Go all in on using AI to do what I currently do better.

Pivot to AI Safety.

Rethink my whole career from first principles.

#1. Wait and adapt

In some moments, I don’t want to change anything. I just want to focus on what I’m doing right now, keep up-to-date with AI, and then adapt as new versions of ChatGPT are released.

My logic for this approach is as follows:

Future versions of ChatGPT will require much less prompting.

The quality and number of AI tools are exploding.

Therefore, if I turn everything I am doing right now into prompts and tools, it will likely become obsolete with new versions of ChatGPT.

This approach is inspired by the logic of self-made billionaire investor Howard Marks, whose mantra is this...

You can’t predict, you can prepare.

—Howard Marks

Marks believes that succeeding as an investor by making predictions of exactly what’s going to occur and getting the timing right is incredibly hard. In one of his famous memos, he writes:

Even well-founded decisions that eventually turn out to be right are unlikely to do so promptly. This is because not only are future events uncertain, their timing is particularly variable.

—Howard Marks

In other words, having the right prediction and the wrong timing is functionally equivalent to making the wrong decision.

Marks’ logic makes me very humble about my ability to succeed with a predict-and-time strategy.

On the other hand, when I follow the wait-and-adapt approach, I’m haunted by the famous questions from mathematician Richard Hamming:

What are the most important problems in your field?

Why aren't you working on them?

AI is the biggest invention of all time, and I’m not going all in on it. I sometimes wonder if I will regret sitting on the sidelines.

#2. Go all in now on using AI to do what I currently do better

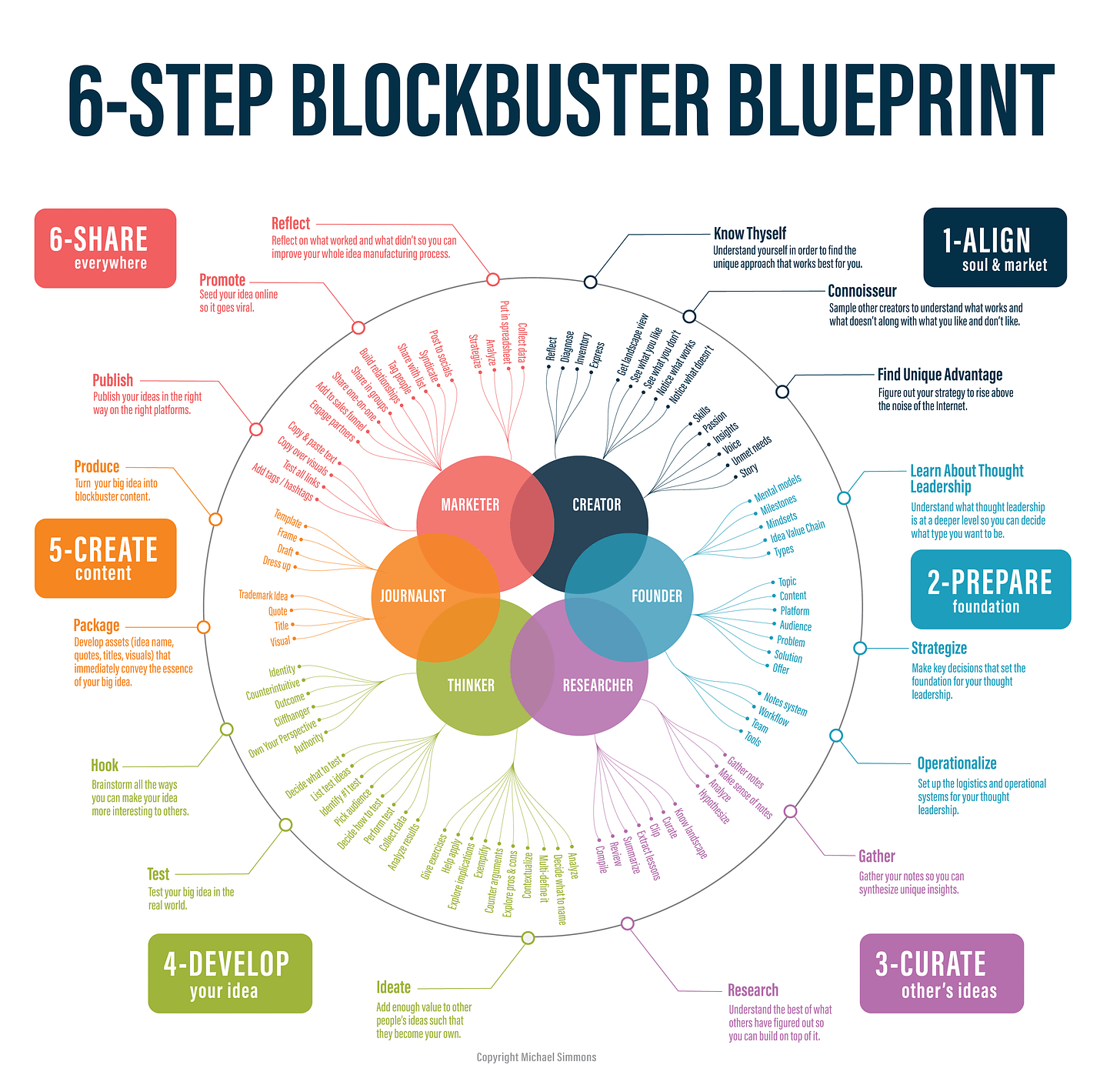

In other moments, part of me wants to go full-time into AI and explore how I can use it to do what I currently do better. While I realize that GPT-4 isn’t where it needs to be to completely revolutionize my process, part of me wants to turn the Blockbuster Blueprint (see below) into a chained set of prompts:

In this pathway, I could also see myself creating a newsletter with ChatGPT prompts and/or turning the prompts into GPTs in the ChatGPT store.

On the other hand, if I could simply push a button and have high-quality articles written, that would NOT be fulfilling for me. The #1 thing I love about thought leadership is the process of learning, thinking, and experimenting with new ideas. I appreciate the pride and authenticity I feel when I publicly share this journey with others and they find it helpful. Therefore, turning the process into something I could do with the click of a button would effectively automate away my deepest passions.

Also, in many ways, superintelligent AI would be like being given a private genie. Part of me feels like I’m not thinking creatively or large enough by just asking this genie to help me do what I currently do better.

#3. Pivot to AI safety

One of my closest friends, Emerson Spartz, has been focused full-time on AI Safety for the past three years, and the more I understand the field, the more concerned I become for the future.

In short, AI poses a serious existential risk for humanity. In other words, AI could make humanity extinct.

Before you write off this possibility as a fringe philosophy from luddites who hate technology, it’s worth noting that the people who are the most expert in technology and who were previously the most optimistic about it are the most worried now.

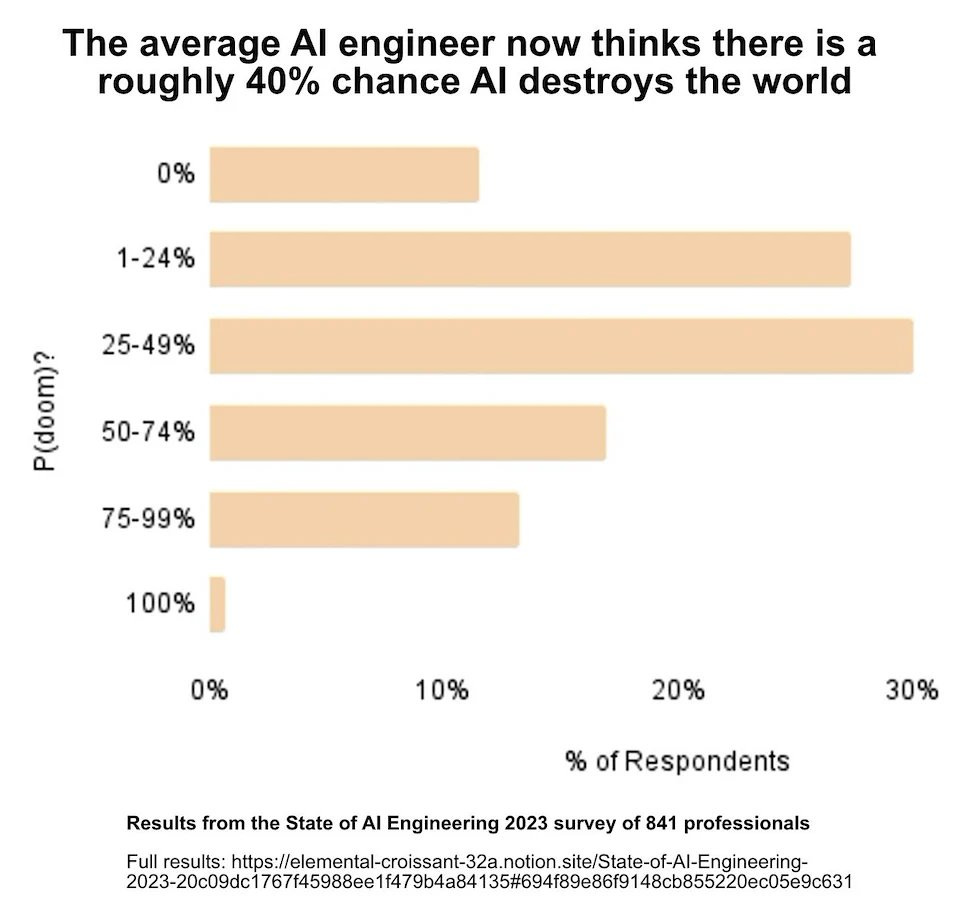

The stats below are particularly shocking…

Even more disturbing, many of the most respected AI experts in the field are just as pessimistic:

On the one hand, the probability of an existential catastrophe is so high that part of me feels like I should pivot into AI Safety and make the largest difference I can. If we were fighting a war with such a high probability of predicted doom, everyone would be drafted to fight in it.

On the other hand, I would be starting from scratch, so I’m not sure how much of a difference I could make, and the idea of focusing all of my time on AI existential risk does not sound enjoyable.

#4. Rethink my whole career from first principles

Another part of me thinks it’s silly to do what I’m currently doing given that AGI is so close. This technology will change what it means to be human, to live, to work, and to be a thought leader at such a fundamental level that I should almost start from scratch in my thinking.

AGI could change how we organize ourselves into governments. It could change what a career means. It could change what the good life is. It could even change what money means to us.

I ask myself…

What will the world be like with trillions of smarter-than-human autonomous agents that have IQs above 1,000 and also think 20,000x faster than us?

And, as you can imagine, my mind has trouble even fathoming this question. The picture seems to be blurry for every AI expert I follow as well. Many fill in this blurriness with either utopian or doomsday scenarios.

Rethinking everything from first principles as if I were starting from scratch feels both exciting and scary. It feels exciting because it’s a whole new possibility and adventure. It feels scary because it would be moving into a quickly changing arena where I may not be operating in my circle of competence, which means there is a higher probability of failure.

Vacillating among the pros and cons of these four possibilities feels like this…

More recently I have become fascinated by a path I haven’t seen widely talked about…

The Fifth Pathway

The current focus of most players in the AI space is to expand the capabilities of AI and then create tools that replace human labor so the same work gets done faster or cheaper.

In the past, this made a ton of sense.

Technology replacing human labor freed humans up to do other forms of labor that they enjoyed more—work that is more creative and mental.

While AGI has this quality, it also has a few other critical qualities that make it different. It will…

Happen drastically faster than any previous technological revolution.

Happen in every knowledge-based industry simultaneously.

Potentially replace every task that a human could do with their mind.

Another camp of players in the space is focused on accelerating human intelligence via:

Brain implants

Nanobots

Mind uploading

Genetic enhancement

Depending on who you talk to, this pathway is known as transhumanism or “The Merge.”

This pathway has been heavily talked about by futurist and Google director Ray Kurzweil, who writes the following in his 2012 book, How To Create A Mind:

Moreover, we will merge with the tools we are creating so closely that the distinction between human and machine will blur until the difference disappears. That process is already well under way, even if most of the machines that extend us are not yet inside our bodies and brains.

Elon Musk sees a similar direction. Therefore, he created Neuralink to manufacture brain implants and is creating humanoid robots at Tesla. Musk writes on X:

In the long term, Neuralink hopes to play a role in AI risk civilizational risk reduction by improving human to AI (and human-to-human) bandwidth by several orders of magnitude.

When a Neuralink is combined with Optimus robot [Tesla’s robot] limbs, the Luke Skywalker solution can become real.

Even Sam Altman talked about “The Merge” in a 2017 blog post, where he also talked about genetic enhancement.

Many of the top AI philosophers and engineers today like Altman and Musk believe that “The Merge” is our #1 hope. While we should likely build to this future, it’s hard to put hope into hardware that is theoretical and 10+ years away when ASI is likely closer.

I have felt like something deep was missing from the dialogue that is practical now for everyone.

Recently, I finally got clarity on what it was…

Human Superintelligence: This Is Our Opportunity To Elevate Human Potential

When it comes to AI, we have a growth mindset. Historic amounts of resources and talent are streaming into the field based on the belief that we can create AGI.

When it comes to humans, we have a fixed mindset. Shockingly few resources and little attention are going toward how we can use AI now to accelerate human potential in all of its forms to levels we can’t even imagine today:

Multiple forms of intelligence (EQ, IQ, SQ, etc.)

Dialectical thinking (overcoming polarization)

Wisdom

Love

Perspective-taking

Compassion

Empathy

Using AI as a complementary cognitive artifact (more on this soon)

Human connection

Adult development

State development

Therapy / Coaching

Morality

Spirituality / Meaning-making

Super-adaptability

In other words, while we can collectively envision and build Artificial Superintelligence that is years away, we don’t seem to be willing or able to do the same for Human Superintelligence.

And, if ASI is truly around the corner, then we need HSI now, for it is the largest challenge and opportunity humanity has ever faced.

As a saying often attributed to Albert Einstein goes, “You cannot solve a problem with the same mind that created it.” And we clearly need a higher level of thinking than we currently have on a collective level. Human Superintelligence is my placeholder idea for that higher level, and for human potential more broadly.

For example, there is low-hanging fruit that exists to drastically increase our collective intelligence right now, but we seem to lack the collective will or imagination to seriously push for it…

Our whole education system is fundamentally flawed and not built for today’s world—let alone the future. To adapt to what will be unimaginable change, we need to rethink education from first principles. My daughter is 15 years old, and I am surprised by how little has changed in the classroom from when I was her age nearly three decades ago. While there are perpetual conversations about school reforms, there hasn’t been any sort of vast reimagining.

We haven’t yet culturally accepted that everyone should be deliberate and diligent about learning throughout their entire life. We, as a culture, broadly speaking, are still mostly in a college paradigm: in the best-case scenario, we get formal schooling, get good grades, pay a ton of money to go to a good college, and then never need to go to school again or be diligent about learning for the rest of our lives.

A rapidly changing world calls for new skills we aren’t teaching. At a fundamental level, we need to help people become super-adapters. This may mean everything from teaching people how to learn, to teaching everyone emotional regulation skills, to constructing new religions, spiritualities, and worldviews that imbue every life with meaning and purpose.

There is very little attention and imagination put toward how to use ChatGPT to drastically increase human intelligence and performance. In every other field already transformed by AI (e.g., chess), the top performers use AI as a core part of their training.

We are becoming increasingly polarized rather than coming together. While diversity of views can tear us apart, it can instead enrich us and create new and better possibilities with enough skill and wisdom. In short, we need to somehow collectively learn the skill of dialectical thinking.

By no means are these problems easy to solve.

But that does not change the fact that we can solve them and we must solve them.

Moreover, we need new levels of imagination and curiosity about who we can collectively become.

If AI is going to be a force for the most good for the most people, then we necessarily need to expand human potential as well. In short…

We Need A Manhattan Project For Human Potential

I always thought that if humans saw an advanced alien species on its way to Earth, we would rapidly and collectively mobilize to come together in a way humanity never had before.

As in World War II, I thought that everyone would rally and think about what they could do to contribute to the whole.

And that just doesn’t seem to be happening.

Maybe I’ve just watched Independence Day too often.

Special thanks to Alina Okun, Jelena Radovanovic, and Bonnie Johnson for talking through ideas in this article.

Special special thanks to Emerson Spartz and Kelvin Lwin for talking about AI with me over the last few years. And to Anand Rao for helping me see AI as a tool for augmentation rather than just automation and coining the term Human Superintelligence and then letting me use it.
