Video Source: Switch Angel

1 minute and 53 seconds. 23 “magic words”. A complete beat from nothing.

No paragraph-long prompts.

No "make it more interesting" back-and-forth.

Just precise commands—G minor scale, trance kick drum, sawtooth bass—that steer the AI like a control panel.

I’ve watched this video 10 times. Not just because the beat is good (it is). But because it shows something most AI advice completely misses.

Top AI experts aren’t just better at prompting. They speak a different language entirely.

Every field has this hidden vocabulary—a minimal set of high-leverage terms that compress expertise into commands. Musicians have it. Coders have it. Designers, writers, analysts—all fields have it.

I call these Magic Words.

Using these magic words in a loop gives a glimpse into the exciting future of high-level AI-human interaction:

Speak a domain-specific command language (magic words).

Respond to AI outputs (expert taste).

Iterate in fast feedback loops.

This approach breaks from conventional human-AI interaction patterns in several ways:

It relies on command language (jargon) rather than conversational language.

It’s a feedback loop rather than a sequential process.

It optimizes for speed rather than depth.

It optimizes for “human in the loop” rather than complete automation.

Now, imagine doing the same in your field.

Imagine using the 80/20 of your expertise (magic words and taste) with AI to create things (ideas, art, designs, photographs, videos, articles) faster and better than you could alone, and unlike anything you would have made without it.

This is an exciting vision for how AI could empower us rather than replace us. It’s a future where we vibe with AI.

Now, let’s dig into what’s actually happening in the video above and what it can teach us about being an AI-empowered high-level creator...

4 Lessons About The Future Of High-Level Human-AI Interaction

Lesson #1: The Future of AI Isn’t Natural Language. It’s the Opposite.

Lesson #2: Stop Planning, Start Looping: How Experts Co-Create with AI in Real-Time.

Lesson #3: She Started Swaying. What Your Body Knows That AI Doesn't.

Lesson #4: Build the Console, Not Just the Song

Lesson #1: The Future of AI Isn't Natural Language. It's the Opposite.

Every mature field has a hidden “command language” that experts use to steer reality.

These magic words are the minimal, high-leverage control lexicon, used within known constraints, to interface with AI conversationally in fast feedback loops. If you know the right words, you can create incredible things.

In the case of the video above, the structure of the words matters. Let's break it down so you can start reverse-engineering the command language of any domain.

Broken down, each command has three parts:

Words: notes, scale, instruments, etc.

Type: G minor, sawtooth, trance kick drum, etc.

Modifier: with acid, subtract one octave, 160 ms, etc.

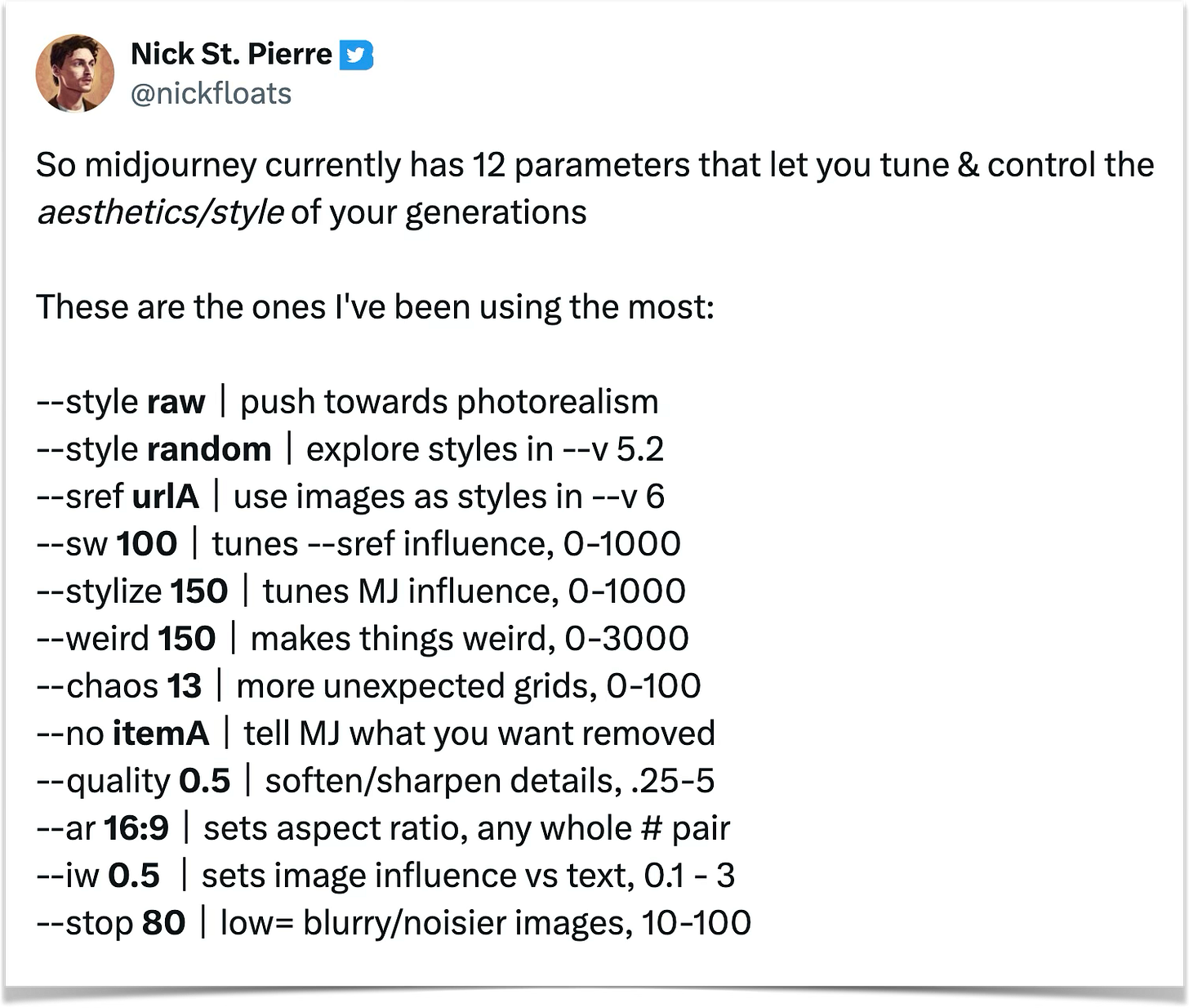
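One way to see this structure is as data. The minimal Python sketch below (Python 3.9+) models each command as a word, a type, and optional modifiers. The class name `MagicCommand`, its fields, and the exact pairings are illustrative assumptions, not a transcript of the video or the syntax of any real music tool.

```python
from dataclasses import dataclass, field


@dataclass
class MagicCommand:
    """Hypothetical model of one command: word + type + modifiers."""

    word: str                                           # what is controlled: "scale", "bass"
    kind: str                                           # the "Type": "G minor", "sawtooth"
    modifiers: list[str] = field(default_factory=list)  # e.g. "subtract one octave"

    def render(self) -> str:
        # Type first, then the word, then any modifiers, comma-separated.
        head = f"{self.kind} {self.word}"
        return ", ".join([head, *self.modifiers])


# Illustrative pairings built from the words listed above.
commands = [
    MagicCommand("scale", "G minor"),
    MagicCommand("kick drum", "trance"),
    MagicCommand("bass", "sawtooth", ["with acid", "subtract one octave"]),
]

for cmd in commands:
    print(cmd.render())
```

Running it prints `G minor scale`, `trance kick drum`, and `sawtooth bass, with acid, subtract one octave`: short, parameter-like phrases rather than sentences.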

In other words, the creator isn’t just sharing words; she’s sharing parameters. The same pairing of words and parameters shows up in other mediums. In Midjourney, for example, one of my favorite creators, Nick St. Pierre, relies on a small set of parameters to get the results he wants:

Why It Matters:

Natural language evolved for:

Social coordination

Storytelling

Ambiguity

Emotional resonance

It did not evolve for:

Fine-grained control

Parameter tuning

Phase transitions

Irreversible decisions

That’s why experts don’t say:

“Make it a little better.”

They say:

“Reduce variance.”

“Increase attack to 160 milliseconds.”

“Cap downside exposure.”

“Regularize the model.”

These phrases are unnatural in everyday conversation—but perfectly natural inside the domain.

They’re compact.

They’re precise.

They map directly onto control surfaces.

The implication here is that the future of AI knowledge work may not be natural language. This is a big deal because there’s a comforting myth embedded in the current AI narrative:

The future of work is just talking to machines in plain English.

That story is partially true—and deeply misleading.

Natural language is a bridge, not a destination.

It’s what gets beginners started.

It’s not what experts rely on when precision, speed, and leverage matter.

The Shift:

Before: How do I prompt better?

After: How do I steer systems using the smallest possible set of high-leverage words?

Bottom Line:

The real future of knowledge work is not learning to speak more “naturally” to AI. It’s learning the right unnatural language for each domain.

Lesson #2: Stop Planning, Start Looping: How Experts Co-Create with AI in Real-Time

What’s Happening:

We see a real-time, fast feedback loop where:

The artist shares a handful of “magic words” (command language for AI)

The software creates and edits the music immediately

The artist vibes to the music

The music then inspires the artist to say new words, which continues the loop.

In other words, rather than "prompt and wait", it’s “aim and adjust.”
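To make the “aim and adjust” shape concrete, here is a minimal Python sketch (Python 3.9+) of the loop. It is an illustration under stated assumptions, not how the tool in the video works: `generate` stands in for the AI tool, `react` stands in for the human, and the settings (`bpm`, `cutoff`) and the toy listener are hypothetical.

```python
from typing import Callable, Optional, TypeVar

T = TypeVar("T")               # whatever the tool produces (audio, image, text)
Params = dict[str, int]        # hypothetical named settings, e.g. bpm
Command = tuple[str, int]      # one "magic word" edit: (setting, new value)


def aim_and_adjust(
    params: Params,
    generate: Callable[[Params], T],
    react: Callable[[T], Optional[Command]],
    max_rounds: int = 20,
) -> tuple[Params, list[T]]:
    """Loop: generate, let the human react, apply the reaction, repeat."""
    history: list[T] = []
    for _ in range(max_rounds):
        output = generate(params)          # the tool responds immediately
        history.append(output)
        command = react(output)            # the human reacts to what they heard
        if command is None:                # taste says "this is it"
            break
        name, value = command
        params = {**params, name: value}   # the next cycle starts from the new state
    return params, history


# Toy stand-ins (hypothetical): "rendering" just snapshots the settings,
# and the pretend listener wants more tempo first, then a brighter filter.
def toy_generate(p: Params) -> tuple[int, int]:
    return (p["bpm"], p["cutoff"])


def toy_react(beat: tuple[int, int]) -> Optional[Command]:
    bpm, cutoff = beat
    if bpm < 140:
        return ("bpm", bpm + 10)
    if cutoff < 80:
        return ("cutoff", cutoff + 20)
    return None


final, history = aim_and_adjust({"bpm": 120, "cutoff": 40}, toy_generate, toy_react)
print(final)          # {'bpm': 140, 'cutoff': 80}
print(len(history))   # 5
```

The key design choice is that each `react` call sees the latest output, so every command depends on what the previous cycle produced. A prompt written entirely up front can't do that.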

How It’s Different:

Other Example Of A Fast Feedback Loop:

Source: Nick St. Pierre

Explaining the shift, Nick St. Pierre, the creator of that example, says the following in several X posts:

Midjourney working on a fast model that will generate an image grid in ~0.5 seconds. We’re moving from a “type prompt, wait, refine” pattern to something much more fluid, that makes the process less about WAITING on the system, and more about PLAYING with it. Radically different kinds of interaction on the way. Real-time, truly interactive AI tools are emerging. [February 2025]

And we’re just starting to see tools make this sort of interaction easy:

In Midjourney --v 7 using draft mode + turbo you can generate 160 images in ~42 seconds and it costs about ~$1.30 [April 2025]

This suggests we might be moving to a world with infinite AI generation + expert human taste:

As humans using these tools, our role increasingly becomes one of sifting through abundance to uncover meaning. It forces you to confront the tension between machine generated infinity, & our human instinct to seek significance, beauty, and worth in an endless field of options. Personally, i find this process to be quite beautiful. [December 2024]

Why It Matters:

The conventional approach treats prompts like recipes: write once, execute repeatedly. This works when you know exactly what you want.

But look at what actually happens in the video at the top of this post: her second command changes based on hearing the first output. Her fifth command responds to something she didn’t anticipate. The loop isn’t just faster; it’s generative. Each cycle produces new information that shapes the next cycle, leading to emergent results that couldn’t have been predicted in advance.

This is the difference between executing a plan and discovering a path.

Lesson #3: She Started Swaying. What Your Body Knows That AI Doesn't.

At the 0:53 mark, we reach a critical threshold. She stops editing and just listens. She moves to the beat for the first time. She’s vibing. From this point on, each time she gets back the updated beat, she sways more, until she’s finally happy with the result.

She’s done in less than two minutes. All with just 135 words. Start to finish.

Why It Matters:

We’re entering an age of infinite generation. AI can create more music, images, and text than any human could consume in a thousand lifetimes.

The scarce resource is no longer creation. It’s curation.

That’s what’s happening at 0:53. She’s not just listening—she’s selecting. From the infinite possibility space of ‘what could be,’ she’s collapsing it to ‘what should be.’ That collapse happens through taste—the accumulated judgment of someone who has heard enough to know what works and what they like.

The swaying is just the visible signal of that curation happening in real-time. When she sways bigger, she’s saying: ‘This is it.’

Bottom Line:

I believe that in the coming years, this skill (fast, confident aesthetic judgment) will become one of the most valuable human capabilities. It’s very hard for beginners in a domain to copy. And, for now, it’s not something AI does particularly well.

Lesson #4: Build the Console, Not Just the Song

Not all of the words tell the AI how to change the music. Some words actually create an interface that makes it easy to change parameter values.

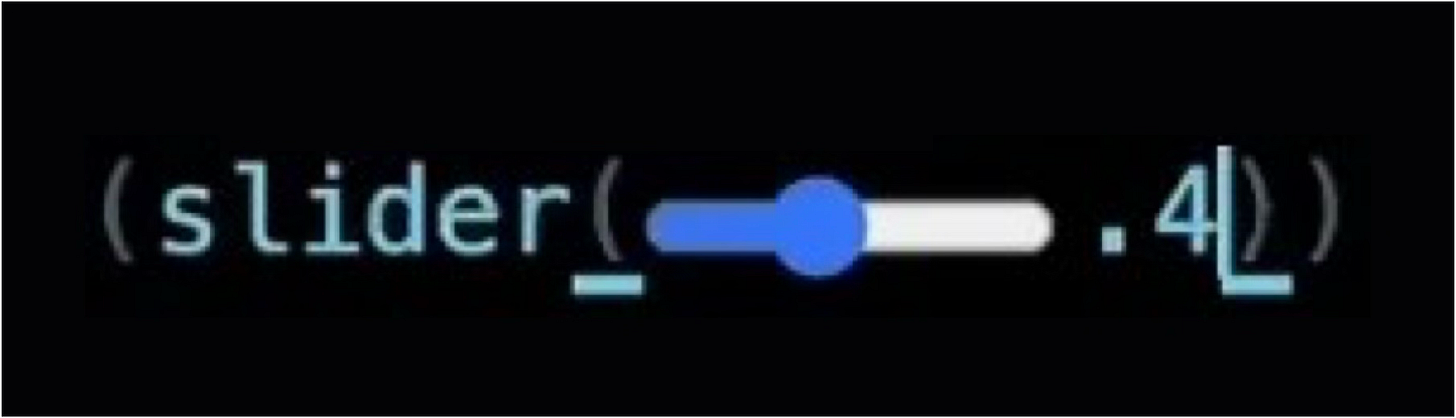

For example, when she says, “controlled with a slider,” she types in the corresponding text, and we see a slider appear:

This makes it possible for her to edit the slider with her mouse, which she does later:

My Experiment:

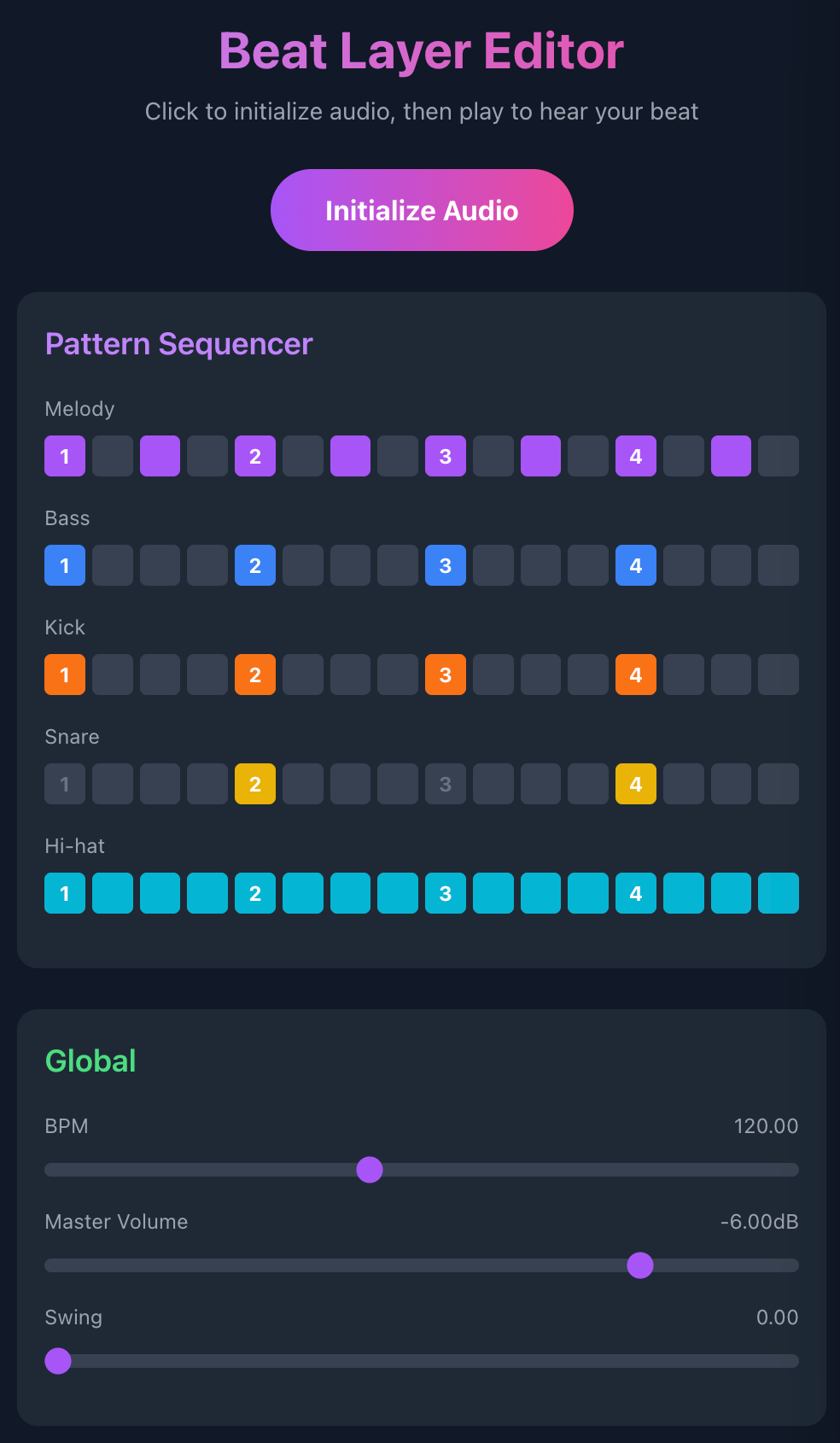

To test whether Claude could build this kind of interface, I wrote the following prompt:

Create an artifact where I have controls (eg, slider, etc) where I can edit the layers of a beat. I want to be able to edit the notes, waves, scale, base, etc. When I edit these, the sound beat should change accordingly.

Surprisingly, it worked right away:

You can check it out here. I’m excited to explore creating something like this for the world of ideas.

Why It Matters:

This is the leap most AI users never make. Many AI experts don’t just create better outputs. They also create better tools that create outputs.

When she says ‘controlled with a slider,’ she’s not editing the music. She’s building an interface. That slider becomes a reusable control surface for future adjustments.

This is meta-creation: using AI not just to make things, but to make things that help you make things.

AI makes this meta-instinct executable. You can now tell it: ‘Build me a tool for X.’ And then use that tool to go further than you could have manually.
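The slider idea can be sketched as a tiny data structure: a named parameter with a known range, plus callbacks that re-render when the value moves. The Python sketch below (Python 3.9+) is an illustration under assumptions: the `Slider` class, the `cutoff` parameter, and the `rerender` stand-in are all hypothetical, not the tool from the video or the artifact Claude produced.

```python
from dataclasses import dataclass, field
from typing import Callable


@dataclass
class Slider:
    """Hypothetical control surface: one named, bounded parameter."""

    name: str
    low: float
    high: float
    value: float
    listeners: list[Callable[[str, float], None]] = field(default_factory=list)

    def __post_init__(self) -> None:
        # Even the starting value must respect the slider's range.
        self.value = self._clamp(self.value)

    def _clamp(self, x: float) -> float:
        # Keep every value inside the known range.
        return max(self.low, min(self.high, x))

    def on_change(self, callback: Callable[[str, float], None]) -> None:
        self.listeners.append(callback)

    def set(self, x: float) -> float:
        # "Dragging" the slider: clamp, store, then notify every listener.
        self.value = self._clamp(x)
        for callback in self.listeners:
            callback(self.name, self.value)
        return self.value


# Usage: bind the slider to the track settings so each move triggers a re-render.
track = {"cutoff": 0.5}
renders = []


def rerender(name: str, value: float) -> None:
    track[name] = value
    renders.append(dict(track))   # stand-in for regenerating the audio


cutoff = Slider("cutoff", low=0.0, high=1.0, value=0.5)
cutoff.on_change(rerender)
cutoff.set(0.8)
cutoff.set(1.7)   # out of range, so it is clamped to 1.0
```

Once built, the slider carries its own range, so later adjustments skip the wording entirely: moving it to 1.7 simply clamps to 1.0 and triggers one more render.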

Conclusion: The Expert's Playbook for AI Vibe Prompting

Expert AI collaboration rests on four pillars:

Magic Words — The domain-specific command language that lets you steer AI with precision

Fast Loops — The real-time iteration that turns creation into discovery

Taste — The human judgment that collapses infinite possibilities into “this is it”

Interface Creation — The interfaces you build so you can vibe better and faster

The clip at the top of this article gives hope for the future on several levels:

It shows how the value of expertise could increase dramatically as AI improves.

It shows how human creative output could skyrocket in the coming years.

It shows how AI collaboration can produce states of flow that make work fun.

While this example is about music, the same approach is spreading to many other areas, each with its own version of “vibe [x]” and its own language:

Vibe coding

Vibe marketing

Vibe analytics

Vibe writing

Vibe design

Vibe filmmaking

Vibe research

Step #1: Find The Magic Words In Your Niche

For the first time in history, the tool for learning a domain’s command language is the same tool you’re learning to command.

In other words:

You can ask AI to help you discover the magic words

Using those words improves your AI collaboration

Which helps you discover more words

Which improves your collaboration further

∞

To facilitate this process for you, I created the following prompt. Let me know what you think in the comments…

PAID SUBSCRIBER PERK:

Magic Words Dictionary For Your Niche

Overview

The Magic Words Dictionary prompt helps you extract this hidden vocabulary for any field, including fields where this vocabulary doesn't formally exist yet.

How It Works

When you run this prompt with an AI, it generates a comprehensive dictionary of 20-30 “magic words” for your chosen field, organized into three types:

Generative Words — Terms that create new elements or bring something into existence

Parametric Words — Terms that modify or tune existing elements within known ranges

Interface Words — Terms that create control surfaces or meta-level ways to interact with systems

For each word, you get:

A precise, technical definition

Why the word matters (what problem it solves)

Valid parameters and ranges

When to use it—and when NOT to

Three examples showing basic, advanced, and combined applications

Common mistakes beginners make

How to develop intuition for the word over time

AI Model To Use

Instructions

Copy and paste the prompt below

Answer three multiple-choice questions

Read the response that AI provides

Play around with the #1 word that captures your interest